The way we use technology to build products and services is evolving at a rate that is difficult to comprehend. Regrettably, the predominant approach to security design is preventative, which means we are designing stateful security in a stateless world. The way we design, implement, and instrument security has not kept pace with modern product engineering techniques such as continuous delivery and complex distributed systems. We typically design security controls for Day 0 of a production release and fail to evolve the state of those controls from Day 1 to Day N.

This problem is also rooted in the lack of feedback loops between modern software-based architectures and security controls. Iterative build practices constantly push product updates, create immutable environments, and rely on complex blue-green deployments and ever-changing third-party microservices. As a result, modern products and services are changing every day, even as security drifts into the unknown.

Security should be objective and rigorously tested, with data and measurement driving our decisions about enterprise security. The era of complex distributed systems, continuous delivery, and advanced delivery models such as blue-green deployments challenges whether traditional testing methods alone are enough. While testing can be a useful feedback mechanism for system instrumentation, testing by itself is insufficient; we should also experiment.

What is the difference between testing and experimenting? Testing seeks to assess and validate the presence of previously known system attributes. Experimentation, in contrast, seeks to derive new information about a system by utilizing the scientific method. Testing and experimentation are both excellent ways to measure the gap between how we assume the system is operating and how it is actually operating.
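The distinction can be sketched in a few lines of Python. This is only an illustration: `FirewallPolicy`, its `monitored` flag, and both functions are hypothetical names invented here, not part of any real tool. The check validates an attribute we already know about, while the experiment changes a condition and records what the system actually does.

```python
from dataclasses import dataclass, field


@dataclass
class FirewallPolicy:
    """Hypothetical in-memory stand-in for a real policy store."""
    open_ports: set = field(default_factory=lambda: {443})
    alerts: list = field(default_factory=list)

    def open_port(self, port: int, monitored: bool = True) -> None:
        self.open_ports.add(port)
        # Assumption: monitoring only covers some change paths.
        if monitored and port != 443:
            self.alerts.append(f"unexpected port {port} opened")


def check_only_https_is_open(policy: FirewallPolicy) -> bool:
    """Testing: validate a previously known attribute of the system."""
    return policy.open_ports == {443}


def experiment_unapproved_port(policy: FirewallPolicy, port: int, monitored: bool) -> dict:
    """Experimenting: change a condition and observe what happens."""
    alerts_before = len(policy.alerts)
    policy.open_port(port, monitored=monitored)
    return {
        "hypothesis": f"opening port {port} raises an alert",
        "observed_alerts": policy.alerts[alerts_before:],
        "hypothesis_held": len(policy.alerts) > alerts_before,
    }
```

The check can only confirm what we already believed. The experiment can surprise us, for example when a change made through an unmonitored path raises no alert at all.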

Security experimentation grew out of the application of chaos engineering to security engineering. Chaos engineering is the discipline of experimenting on a distributed system in order to build confidence in the system’s ability to withstand turbulent conditions in production.

A common saying in chaos engineering is “Hope is not a strategy.” When I began my journey in applying chaos to security with ChaoSlingr, I began to think differently about the role of failure, both in the way we build systems and the ways we attempt to secure them. How do we typically identify the failure of security controls within the enterprise? We typically don’t recognize that something isn’t working properly until it has already failed, and this is often true with security incidents. Security incidents are not effective measures of detection, because at that point it's already too late. We must find better ways of instrumenting and monitoring our systems if we aspire to be proactive in detecting security failures.

Image by Aaron Rinehart

Though it may remind you of Dr. Seuss's classic children's book, "One Fish Two Fish Red Fish Blue Fish," security testing involves much more than a crayon box full of purple, blue, and red team exercises. Rather, it is a wide discipline consisting of important techniques such as ethical hacking, threat hunting, vulnerability scanning, and more. In this article, we will discuss the importance of objective security instrumentation in general, the gaps in current security testing methodologies such as red and purple team exercises, and how the advent of security experimentation can help close these gaps.

It is not our intention to discount the value of red and purple exercises or other security testing methods but to expand on the importance of this testing and clarify an emerging technique.

Originating with the Armed Forces, red teaming has held many different definitions over the years, but it can be described today as an adversarial approach that emulates the behaviors and techniques of attackers in the most realistic way possible to test the effectiveness of a security program. Two common forms of red teaming seen in enterprise security testing are ethical hacking and penetration testing, which often involve a mix of internal and external engagement.

Historical problems: Red vs. blue team testing

- Feedback loops in red team exercises frequently consist of reports tossed over the wall, if they are shared at all

- Exercises primarily focus on malicious attackers, exploits, and complex subjective events

- Red team exercises emphasize remediation of vulnerabilities rather than prevention and detection

- Teams are incentivized by their ability to outwit the opposing side

- Red teams are often composed at least partially of outsourced groups

- Red teams and blue teams have misaligned incentives

- Success for a red team is often measured as "big scary report = job well done," whereas success for a blue team is often measured as "no alerts = preventative controls all worked!"

- Success depends on how many controls the team can bypass (blue team failure points)

- For blue teams, many alerts can be misunderstood to mean that detection capabilities are operating effectively

Purple team exercises attempt to create a more cohesive testing experience between offensive and defensive security techniques by increasing transparency and education and by improving feedback loops. By integrating the defensive tactics and controls from the blue team with the threats and vulnerabilities found by the red team into a single narrative, the goal is to maximize the efforts of each.

Problems with red team (RT) and purple team (PT) exercises in modern product engineering

- Many of the problems with red teaming also exist for PT exercises

- Exercises are typically performed on only a top percentage of the application portfolio

- Exercises are typically done on an annual or monthly basis, or at best weekly on, for example, “Red Team Mondays,” which can be resource-intensive, even for a mature enterprise

- RT and PT exercises focus primarily on malicious or adversarial attacks and often result in some margin of success. Throughout my career, I have rarely seen one of these exercises end with a failed attempt.

- RT and PT exercises are primarily focused on malicious activity as a root cause, although there is a growing trend toward human factors

- RT and PT exercises, despite providing an objective approach to security testing, are difficult to measure given the number of system changes, attacks, exploit attempts, scans, etc. Garbage in = garbage out becomes an issue with many changes occurring in the environment. During a red team event, it becomes difficult to measure the cascading effects and associated security program effectiveness of these activities

- RT and PT exercises cannot keep pace with continuous delivery and distributed computing environments. Today's product software engineering teams can deliver multiple product updates within a 24-hour period, which is less time than it takes to conduct an effective red team exercise. The results of the exercise can lose relevance because the system may have fundamentally changed by the time they are delivered.

- RT and PT techniques lack the automation needed to keep up with changes in modern design patterns. Automating the process adds significant value to any information security strategy. However, automation can also lull security personnel into a false sense of security: it can create complacency and unmanageable complexity, making it more difficult to see the "big picture."

- RT and PT exercises lack ongoing assessment of past findings to identify breakage

The root of all evil: Reflecting on the past

Before we dive into chaos engineering and its evolution into security experimentation, consider the simple question: "Where do data breaches typically come from?"

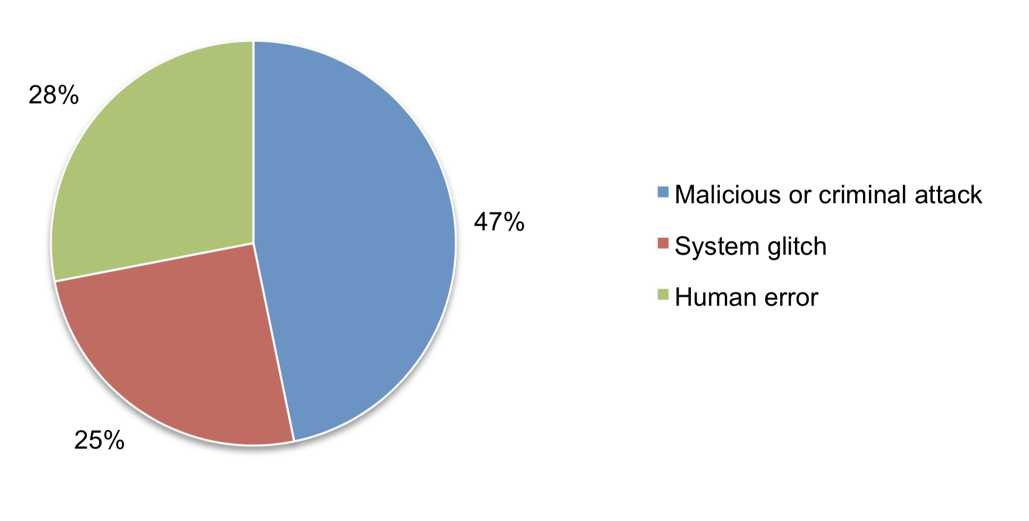

The above chart, from the Ponemon Institute’s 2017 Cost of Data Breach study, shows that malicious or criminal attacks comprised 47% of data breaches in 2017. This is the most common area of focus within the cybersecurity industry, consisting of advanced persistent threats, zero-day attacks, phishing campaigns, nation-state attacks, malware variants, etc.

The other two sections of the chart consist of human error (28%) and system glitches (25%), which together represent 53% of 2017 data breaches. The landscape of solutions and the common definition of cybersecurity largely focus on only 47% of the overall problem: malicious or adversarial attacks. Most people find malicious and criminal activities more interesting than mistakes that humans and machines make.

Taken together, human error and system glitches are the leading cause of data breaches. This is not a new trend; it has been ongoing for about eight years. Unless we start thinking differently, it is unlikely to change. Why do we seem to be unable to reduce the number of security incidents and breaches every year despite spending more on security solutions? You could argue that if the cybersecurity industry had focused more on human factors and system failures, many malicious activities would have failed. For example, had there not been a misconfiguration, a gap in security control coverage, or a failed security technical solution, many malicious or criminal attacks would not have been possible. However, it's difficult to prove this argument due to the lack of consistently reliable information regarding the relationship between malicious attacks and human factors or system glitches as the underlying root cause.

Failure: A weapon to destroy or a tool to build?

Image by WikiMedia Commons

At the heart of human error and system glitches is understanding the role of failure in how we build, operate, and improve our systems. In fact, failure is also an important building block of human life, as it is how we learn and grow. It would also be naïve to think that the way we build systems is perfect, given that “to err is human.”

Failure happens

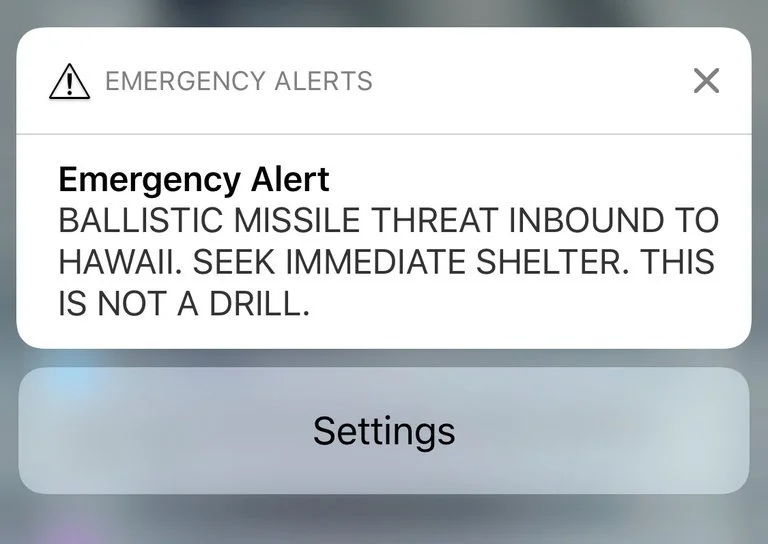

At 8:07am on January 13, 2018, a false ballistic missile alert was issued via the Emergency Alert System and Commercial Mobile Alert System over television, radio, and cellphones in the state of Hawaii, warning of an incoming ballistic missile threat and advising residents to seek shelter. The alert concluded, "This is not a drill." However, no civil defense outdoor warning sirens were authorized or sounded by the state.

Chaos engineering is rooted in the concept that failure always exists, and what we do about it is our choice. Popularized by Netflix’s Chaos Monkey, chaos engineering is an emerging discipline that intentionally triggers failures in a controlled fashion to gain confidence that the system will respond to failure in the manner we intend. Recalling that “hope is not a strategy,” simply hoping your system is functioning perfectly and that it couldn’t possibly fail is not an effective or responsible strategy.

Combining security and chaos engineering

In applying chaos engineering to cybersecurity, the focus has been on identifying how human factors and system glitches directly affect the security of the systems we build and secure. As previously stated, in the field of information security we typically learn of security or system failures only after a security incident is triggered. The incident response phase is too late; we must be more proactive in identifying failure in our security capabilities and the systems they protect.

Chaos engineering goes beyond traditional failure testing in that it’s not only about verifying assumptions. It also helps us explore the many unpredictable things that could happen and discover new properties of our inherently chaotic systems. Chaos engineering tests a system's ability to cope with real-world events such as server failures, firewall rule misconfigurations, malformed messages, degradation of key services, etc. using a series of controlled experiments. Briefly, a chaos experiment—or, for that matter, a security experiment—must follow four steps:

- Identify and define the system's normal behavior based on measurable output

- Develop a hypothesis regarding the normal steady state

- Craft an experiment based on your hypothesis and expose it to real-world events

- Test the hypothesis thoroughly by comparing the steady state and the results from the experiments
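The four steps above can be sketched as a small Python harness. This is a minimal illustration under stated assumptions, not a reference implementation: `run_experiment`, its parameters, and the `blocked_ratio` metric are hypothetical names chosen for this example, and the "system" is just a dictionary.

```python
import statistics
from typing import Callable


def run_experiment(
    measure: Callable[[], float],
    inject: Callable[[], None],
    rollback: Callable[[], None],
    tolerance: float,
    samples: int = 5,
) -> dict:
    # Step 1: define normal behavior from a measurable output.
    baseline = statistics.mean([measure() for _ in range(samples)])

    # Step 2: the hypothesis is that the metric stays within
    # `tolerance` of the baseline even while the event is active.

    # Step 3: expose the system to the event.
    inject()
    try:
        observed = statistics.mean([measure() for _ in range(samples)])
    finally:
        rollback()  # always restore the system, even if measuring fails

    # Step 4: compare the steady state with the experimental result.
    deviation = abs(observed - baseline)
    return {
        "baseline": baseline,
        "observed": observed,
        "hypothesis_held": deviation <= tolerance,
    }


# Hypothetical system: the share of unwanted traffic a control blocks.
state = {"blocked_ratio": 1.0}
result = run_experiment(
    measure=lambda: state["blocked_ratio"],
    inject=lambda: state.update(blocked_ratio=0.4),    # simulated misconfiguration
    rollback=lambda: state.update(blocked_ratio=1.0),
    tolerance=0.05,
)
```

Here the hypothesis does not hold, which is the useful outcome: the experiment has shown a gap between how we assumed the control behaves and how it actually behaves.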

The backup always works

The backup always works; it’s the restore you have to worry about. Disaster recovery and backup/restore testing are classic examples of how devastating the impact can be when something is not exercised often enough. The same goes for the rest of your security controls. Don’t wait to discover that something is not working; instead, proactively introduce failures into the system to ensure that your security is as effective as you think it is.

A common way to get started in security experimentation and chaos engineering is to understand the role of the gameday exercise as the primary channel used to plan, build, collaborate, execute, and conduct post-mortems for experiments. Gameday exercises typically run between two and four hours and involve a team of engineers who develop, operate, monitor, and/or secure an application. Ideally, they involve members working collaboratively from a combination of these areas.

Examples of security and chaos experiments

We cannot tell you how often major system outages are caused by misconfigured firewall rule changes. We’re willing to bet that this is a common occurrence for many others as well.

Practice makes perfect

The intent is to introduce failure in a controlled security experiment, typically during a gameday exercise, to determine how effectively your tools, techniques, and processes detected the failure, which tools provided the insights and data that led to its detection, how useful the data was in identifying the problem, and ultimately, whether the system operated as you thought it would.
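One way to make those questions concrete is to record what each tool reported after the failure was injected and summarize the results. The sketch below is an assumption about how such a record might look; `Detection`, `summarize`, and the tool names are hypothetical and not taken from any particular product.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Detection:
    tool: str             # hypothetical tool name
    seconds_after: float  # delay between injection and the tool's alert
    actionable: bool      # did the alert carry enough data to identify the problem?


def summarize(detections: Sequence[Detection], expected_tool: str) -> dict:
    """Answer the gameday questions for one injected failure."""
    first = min(detections, key=lambda d: d.seconds_after, default=None)
    return {
        "detected": first is not None,
        "first_tool": first.tool if first else None,
        "time_to_detect_s": first.seconds_after if first else None,
        "useful_tools": sorted(d.tool for d in detections if d.actionable),
        "matched_expectation": first is not None and first.tool == expected_tool,
    }


report = summarize(
    [Detection("siem", 120.0, True), Detection("config-audit", 30.0, False)],
    expected_tool="siem",
)
```

In this made-up run, a tool other than the expected one fired first, and its alert was not actionable. That is the kind of finding a gameday post-mortem is meant to surface.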

The most common response from those who head down the chaos engineering path is, “Wow, I wasn’t expecting that to happen!” or “I didn’t know it worked that way.”

Chaos engineering and security experimentation both require a solid understanding of their principles and fundamentals and a focus on building maturity in the discipline. As a case in point, you would never develop an experiment and run it directly in a production environment without first testing any tools, experiments, etc. in a lower environment such as staging. Start by developing competency and confidence in the methods and tools needed to perform the experiments.

Start small and manual. If your hypothesis is true, automate the experiment

James Hamilton & Bill Hoffman, On Designing and Deploying Internet-Scale Services

- Expect failures. A component might crash or stop at any time. Dependent components might fail or stop at any time. There will be network failures. Disks will run out of space. Handle all failures gracefully.

- Keep things simple. Complexity breeds problems. Simple things are easier to get right. Avoid unnecessary dependencies. Installation should be simple. Failures on one server should have no impact on the rest of the data center.

- Automate everything. People make mistakes; people need sleep; people forget things. Automated processes are testable, fixable, and ultimately much more reliable. Automate wherever possible.

Red team (RT) and purple team (PT) vs. security experimentation

- Security experimentation has different intentions and goals than red team or purple team exercises. Its primary goal is to proactively identify security failures caused by human factors, poor design, or lack of resiliency. The goal of RT or PT exercises is to exploit weaknesses in the system.

- Security experimentation is based on simple, isolated, and controlled tests rather than complex attack chains involving hundreds or even thousands of changes. The purpose of these small isolated changes is not only to keep the test focused but also to enable direct identification of problems. It can be difficult to control the blast radius and separate the signal from the noise when you make a large number of simultaneous changes.

- The intention of security experimentation is to build a learning culture around how your enterprise security systems work through instrumentation. While experiments often start small and are performed manually in staging environments, the goal is to build enough confidence in a given experiment to automate and run it on production systems.

- RT and PT exercises predominantly focus on the malicious attacker or adversarial approach, whereas security experimentation focuses on the role of failure and human factors in system security.

- RT/PT and security experimentation complement each other by providing real-world, actionable, and objective data about how organizations can improve their security practices.

- Security experimentation attempts to account for the complex distributed nature of modern systems. This is why changes must be simple, small in scope, automated over time, and run in production.
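The emphasis on small, isolated changes can be illustrated with a short Python sketch. It applies one change at a time, records whether it was detected, and reverts it before moving on, so each result can be attributed to exactly one change. The `run_isolated` helper, the fault names, and the detector are all hypothetical.

```python
from typing import Callable, Dict, Mapping, Tuple

Change = Tuple[Callable[[], None], Callable[[], None]]  # (apply, revert)


def run_isolated(changes: Mapping[str, Change], detected: Callable[[], bool]) -> Dict[str, bool]:
    """Apply each change alone so its outcome is not mixed with others."""
    results: Dict[str, bool] = {}
    for name, (apply, revert) in changes.items():
        apply()
        try:
            results[name] = detected()
        finally:
            revert()  # undo the change before the next one starts
    return results


# Hypothetical environment: the set of faults currently active.
active = set()
changes = {
    "open_port_22": (
        lambda: active.add("open_port_22"),
        lambda: active.discard("open_port_22"),
    ),
    "disable_logging": (
        lambda: active.add("disable_logging"),
        lambda: active.discard("disable_logging"),
    ),
}
# Assumed detector that only notices unexpected open ports.
results = run_isolated(changes, detected=lambda: "open_port_22" in active)
```

Because only one fault is active at any moment, the undetected `disable_logging` change stands out clearly instead of being hidden behind the alert raised for the open port.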

Want to learn more? Join the discussion by following @aaronrinehart and @AWeidenhamer on Twitter.
