If you're anything like many open source enthusiasts, you may have grown up watching science fiction shows like Knight Rider, Star Trek, or (my personal favorite) Time Trax. What do they have in common? In each, voice is the key medium through which the protagonists interact with a computer. Knight Rider had KITT, Star Trek had the ubiquitous computer, and even the indefatigable Darien Lambert in Time Trax had dependable Selma, the holographic assistant.

As advances have been made in machine learning, compute power, and neural networks, voice interaction is increasingly moving from science fiction into science fact.

Disappointingly, the early leaders in this space have primarily been commercial and proprietary players: Apple with Siri, Amazon with Alexa, Microsoft with Cortana, Google with Google Assistant, and Samsung with Bixby. Given their for-profit imperatives, it's no surprise that big companies routinely record and store voice data, then use it to segment audiences, profile people, and target them with advertising. Voice data, like other big data, is now a currency.

Given the widespread adoption of natural-language understanding as a user interface, and the potential privacy intrusions of proprietary solutions, open source voice tools are gaining traction.

So, what's in an open source voice stack?

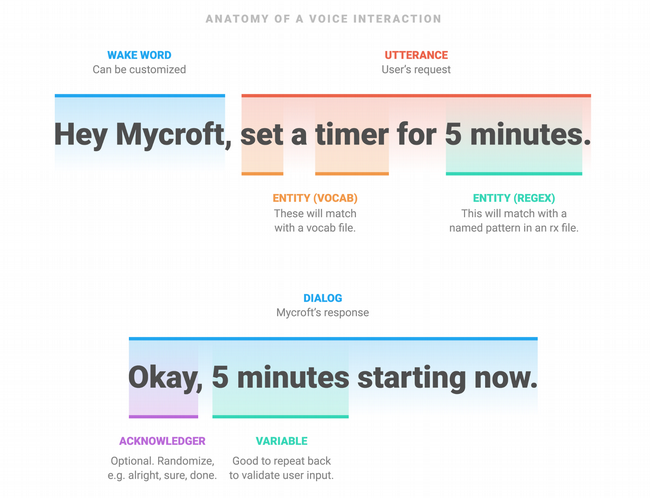

A voice interaction begins with a wake word—also called a hot word—that prepares the voice assistant to receive a command. Then a speech-to-text engine transcribes an utterance from voice sounds into written language, and an intent parser determines what type of command the user wants to execute. Then the voice stack selects a command to run and executes it. Finally, it turns written language back into voice sounds using a text-to-speech engine.
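To make the flow concrete, here is a minimal Python sketch of how the layers hand data to one another. Every stage is a hypothetical placeholder function, not the API of any real engine. In a real stack, each one would wrap a wake-word detector, speech-to-text engine, intent parser, skill, or TTS engine like the ones described below.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class VoiceStack:
    # Each field is a hypothetical stand-in for one layer of the stack.
    detect_wake_word: Callable[[bytes], bool]      # audio -> was the wake word heard?
    speech_to_text: Callable[[bytes], str]         # audio -> transcript
    parse_intent: Callable[[str], Optional[str]]   # transcript -> intent name (or None)
    skills: dict[str, Callable[[str], str]]        # intent name -> handler returning reply text
    text_to_speech: Callable[[str], bytes]         # reply text -> audio

    def handle(self, wake_audio: bytes, command_audio: bytes) -> Optional[bytes]:
        # 1. Ignore everything until the wake word is detected.
        if not self.detect_wake_word(wake_audio):
            return None
        # 2. Transcribe the spoken command.
        utterance = self.speech_to_text(command_audio)
        # 3. Decide what the user wants.
        intent = self.parse_intent(utterance)
        # 4. Run the matching skill, or fall back to a default reply.
        handler = self.skills.get(intent) if intent is not None else None
        reply = handler(utterance) if handler else "Sorry, I didn't understand that."
        # 5. Turn the reply back into audio.
        return self.text_to_speech(reply)


# Usage with toy stubs: "audio" here is just bytes of text.
stack = VoiceStack(
    detect_wake_word=lambda audio: audio == b"hey mycroft",
    speech_to_text=lambda audio: audio.decode(),
    parse_intent=lambda text: "time" if "time" in text else None,
    skills={"time": lambda text: "It is noon."},
    text_to_speech=lambda text: text.encode(),
)
print(stack.handle(b"hey mycroft", b"what time is it"))  # b'It is noon.'
```

Keeping each layer behind a plain function boundary mirrors the point of an open stack: any layer can be swapped for a different open source engine without touching the others.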

At each layer of the voice stack, several open source solutions are available.

Wake word detection

One of the earliest wake-word engines was PocketSphinx, which recognizes wake words based on phonemes: the smallest units of sound that distinguish one word from another in a language. Different languages have different phonemes. For example, it's hard to approximate the Indonesian trilled "r" in English (the sound you make when you "roll" your "r"). Using phonemes for wake-word detection can be a challenge for people who are not native speakers of a language, because their pronunciation may differ from the "standard" pronunciation of a wake word.
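The toy below is not how PocketSphinx works internally, since it relies on statistical acoustic models. It only illustrates the phoneme idea and why accents matter. It assumes some earlier stage has already turned audio into a stream of ARPAbet-style phoneme symbols. It then accepts the wake word if a window of that stream is within a small edit distance of the expected phonemes. The phoneme transcription of the hypothetical "hey mycroft" wake word and the `max_errors` tolerance are illustrative assumptions.

```python
# Approximate ARPAbet phonemes for a hypothetical "hey mycroft" wake word.
WAKE_WORD = ["HH", "EY", "M", "AY", "K", "R", "AO", "F", "T"]


def edit_distance(a: list[str], b: list[str]) -> int:
    """Levenshtein distance between two phoneme sequences."""
    prev = list(range(len(b) + 1))
    for i, pa in enumerate(a, start=1):
        curr = [i]
        for j, pb in enumerate(b, start=1):
            cost = 0 if pa == pb else 1
            curr.append(min(prev[j] + 1,           # delete pa
                            curr[j - 1] + 1,       # insert pb
                            prev[j - 1] + cost))   # substitute (or match)
        prev = curr
    return prev[-1]


def spot_wake_word(stream: list[str], wake: list[str] = WAKE_WORD,
                   max_errors: int = 1) -> bool:
    """Slide a wake-word-sized window over the recognized phonemes."""
    n = len(wake)
    for start in range(len(stream) - n + 1):
        if edit_distance(stream[start:start + n], wake) <= max_errors:
            return True
    return False


# One vowel differs (AO -> AA): still within tolerance.
print(spot_wake_word(["HH", "EY", "M", "AY", "K", "R", "AA", "F", "T"]))  # True
# Two phonemes differ: rejected, even though a listener would understand it.
print(spot_wake_word(["HH", "EY", "M", "AA", "K", "L", "AO", "F", "T"]))  # False
```

The second call shows the problem described above. A speaker whose pronunciation drifts a little further from the "standard" phonemes falls outside the tolerance and is simply not heard.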

Snowboy is another hot-word detection engine; it's available under both commercial and open source licenses. Unlike PocketSphinx, Snowboy doesn't use phonemes for wake-word detection. Instead, it uses a neural network trained on both positive and negative examples (recordings that do and don't contain the wake word) to distinguish what is and isn't a wake word.
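Snowboy's actual network and audio features aren't described here, so the sketch below shows only the core idea of learning from positive and negative examples. It trains the simplest possible "network", a single sigmoid neuron (logistic regression), with gradient descent on made-up two-number feature vectors. A real engine would use a deeper network over features extracted from audio.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(w: list[float], b: float, x: list[float]) -> float:
    """Probability that feature vector x contains the wake word."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def train(examples: list[list[float]], labels: list[int],
          epochs: int = 500, lr: float = 0.5) -> tuple[list[float], float]:
    """Fit one sigmoid neuron with batch gradient descent on log loss."""
    w, b, n = [0.0] * len(examples[0]), 0.0, len(examples)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * len(w), 0.0
        for x, y in zip(examples, labels):
            err = predict(w, b, x) - y  # derivative of log loss w.r.t. the logit
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


# Hypothetical 2-D feature vectors standing in for real audio features.
positives = [[0.9, 0.8], [0.8, 0.9], [1.0, 0.7]]  # label 1: wake word present
negatives = [[0.1, 0.2], [0.2, 0.1], [0.0, 0.3]]  # label 0: other sounds
w, b = train(positives + negatives, [1, 1, 1, 0, 0, 0])
print(predict(w, b, [0.9, 0.9]) > 0.5)  # True
print(predict(w, b, [0.1, 0.1]) > 0.5)  # False
```

Negative examples matter as much as positive ones here. Without them, the model has no pressure to output a low score for sounds that are not the wake word.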

Mycroft AI's Precise wake-word engine works similarly by training a recurrent neural network to differentiate between what is and isn't a wake word.
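Recurrent networks suit audio because they read a clip one short frame at a time and carry a hidden state forward, so earlier sounds influence how later ones are interpreted. The sketch below shows only the forward pass of a tiny, untrained Elman-style RNN in plain Python. The layer sizes, random weights, and frame features are assumptions for illustration, and Precise's actual architecture and training may differ.

```python
import math
import random


class TinyRNN:
    """Untrained Elman RNN: reads feature frames one at a time, then scores the clip."""

    def __init__(self, n_features: int, n_hidden: int, seed: int = 0):
        rng = random.Random(seed)
        self.w_x = [[rng.uniform(-0.5, 0.5) for _ in range(n_features)] for _ in range(n_hidden)]
        self.w_h = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)] for _ in range(n_hidden)]
        self.b_h = [0.0] * n_hidden
        self.w_out = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
        self.b_out = 0.0

    def wake_probability(self, frames: list[list[float]]) -> float:
        h = [0.0] * len(self.b_h)
        for x in frames:
            # The new hidden state mixes the current frame with the previous state,
            # which is what lets the network "remember" earlier sounds in the clip.
            h = [
                math.tanh(
                    sum(w * xi for w, xi in zip(self.w_x[j], x))
                    + sum(w * hk for w, hk in zip(self.w_h[j], h))
                    + self.b_h[j]
                )
                for j in range(len(h))
            ]
        logit = sum(w * hj for w, hj in zip(self.w_out, h)) + self.b_out
        return 1.0 / (1.0 + math.exp(-logit))


rnn = TinyRNN(n_features=3, n_hidden=4)
clip = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6], [0.2, 0.1, 0.0]]
print(rnn.wake_probability(clip))  # a value in (0, 1); meaningless until trained
```

Training, for example backpropagation through time on clips labeled as wake word or not wake word, is what turns these random weights into a useful detector. It is omitted here to keep the example short.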

All of these wake-word detectors work "on-device," meaning they don't need to send data to the cloud, which helps protect privacy.
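One way to picture "on-device" is shown in the sketch below. Incoming audio frames go into a small in-memory ring buffer that silently discards old frames, and the detector only ever looks at that buffer. The frame format, window size, and `is_wake_word` callback are hypothetical, and nothing here reflects a specific engine's API.

```python
from collections import deque
from typing import Callable, Iterable, Optional


def listen(frames: Iterable[bytes],
           is_wake_word: Callable[[list[bytes]], bool],
           window: int = 3) -> Optional[int]:
    """Return the frame index where the wake word was detected, or None.

    Audio is held only in a fixed-size local buffer: appending a new frame
    to a full deque drops the oldest one, and no frame is sent anywhere.
    """
    buffer: deque = deque(maxlen=window)
    for i, frame in enumerate(frames):
        buffer.append(frame)
        if len(buffer) == window and is_wake_word(list(buffer)):
            return i
    return None


def toy_detector(buf: list[bytes]) -> bool:
    # Toy stand-in: "audio frames" are just words here.
    return buf == [b"hey", b"my", b"croft"]


print(listen([b"noise", b"hey", b"my", b"croft", b"what"], toy_detector))  # 3
```

Only after `listen` returns would a stack start passing the following audio to speech-to-text. Everything heard before the wake word has already been overwritten locally.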

Speech-to-text conversion

Accurate speech-to-text conversion is one of the most challenging parts of the open source voice stack. Kaldi is one of the most popular speech-to-text engines available, and it has several "models" to choose from. In the world of speech-to-text, a model is typically a neural network that has been trained on specific data sets using a specific algorithm. Kaldi has models for English, Chinese, and some other languages. One of Kaldi's most attractive features is that it works on-device.
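When comparing engines or models, accuracy is commonly reported as word error rate (WER). WER is the minimum number of word substitutions, deletions, and insertions needed to turn the engine's transcript into a human-checked reference, divided by the number of words in the reference. The minimal Python sketch below treats lowercasing and whitespace tokenization as simplifying assumptions. Real evaluations usually normalize punctuation and numbers too.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """(substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.lower().split(), hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # d[i][j]: fewest edits turning the first i reference words into the first j hypothesis words
    d = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        d[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        d[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            sub = 0 if ref[i - 1] == hyp[j - 1] else 1
            d[i][j] = min(d[i - 1][j] + 1,        # deletion
                          d[i][j - 1] + 1,        # insertion
                          d[i - 1][j - 1] + sub)  # substitution or match
    return d[len(ref)][len(hyp)] / len(ref)


print(word_error_rate("play something by the whitlams",
                      "play something by the wit lamps"))  # 0.4
```

Here "whitlams" became "wit" (one substitution) plus an extra word "lamps" (one insertion), giving 2 errors over 5 reference words. Proper nouns like band names are exactly where general-purpose models tend to stumble.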

Mozilla's DeepSpeech implementation—along with the related Common Voice data acquisition project—aims to support a wider range of languages. Currently, DeepSpeech's compute requirements mean it can only be used as a cloud implementation—it is too "heavy" to run on-device.

One of the biggest challenges with speech-to-text is training the model. Mycroft AI has partnered with Mozilla to help train DeepSpeech, passing the training data it collects back to Mozilla to help improve the accuracy of its models.

Intent parsing

A strong voice stack also needs to ensure that the user's intent is captured accurately. Several open source intent parsers are available. Rasa is open source and widely used in both voice assistants and chatbots.

Mycroft AI uses two intent parsers. The first, Adapt, uses a keyword-matching approach to determine a confidence score, then passes control to the skill, or command, with the highest confidence. Padatious takes a different approach, where examples of entities are provided so it can learn to recognize an entity within an utterance.
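The keyword-matching idea can be sketched as shown below. This is not Adapt's real API or scoring formula. The skill names, keyword vocabularies, scoring rule (the share of the utterance's words that are known keywords), and threshold are all hypothetical. They are chosen only to show the pattern of scoring every skill and handing control to the most confident one.

```python
from typing import Optional

# Hypothetical skills and the keywords each one listens for.
SKILLS: dict[str, set[str]] = {
    "weather": {"weather", "rain", "forecast", "temperature"},
    "music": {"play", "song", "album", "music"},
    "timer": {"timer", "set", "minutes", "seconds"},
}


def confidence(utterance: str, keywords: set[str]) -> float:
    """Fraction of the utterance's distinct words that are skill keywords."""
    words = set(utterance.lower().split())
    return len(words & keywords) / len(words) if words else 0.0


def best_skill(utterance: str, skills: dict[str, set[str]] = SKILLS,
               threshold: float = 0.2) -> Optional[tuple[str, float]]:
    """Pick the skill with the highest confidence, if it clears the threshold."""
    name, score = max(((n, confidence(utterance, kw)) for n, kw in skills.items()),
                      key=lambda pair: pair[1])
    return (name, score) if score >= threshold else None


print(best_skill("set a timer for ten minutes"))  # ('timer', 0.5)
print(best_skill("will it rain tomorrow"))        # ('weather', 0.25)
print(best_skill("hello there"))                  # None
```

The threshold is what lets the assistant say "I don't understand" instead of forcing every utterance onto some skill.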

Intent collisions are one of the challenges with intent parsers. Imagine the utterance:

"Play something by The Whitlams"

Depending on what commands or skills are available, there may be more than one that can handle the intent. How does the intent parser determine which one to pass to? Mycroft AI's Common Play Framework assigns different weights to different entities, leading to a more accurate overall intent-confidence score.
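The hypothetical sketch below resolves the collision with weights. Each candidate skill reports which kinds of entity it recognized in the utterance, each entity type carries a weight, and the summed weights become that skill's confidence. The skill names, entity types, and weights are invented for illustration and are not the Common Play Framework's actual API or values.

```python
from dataclasses import dataclass, field

# Hypothetical weights: recognizing a specific artist is stronger evidence
# than recognizing the generic verb "play".
ENTITY_WEIGHTS = {"play_verb": 0.1, "artist": 0.6, "album": 0.5, "podcast_title": 0.6}


@dataclass
class Candidate:
    skill: str
    matched_entities: list[str] = field(default_factory=list)

    def confidence(self) -> float:
        total = sum(ENTITY_WEIGHTS.get(e, 0.0) for e in self.matched_entities)
        return min(total, 1.0)  # cap so scores stay comparable across skills


def resolve(candidates: list[Candidate]) -> str:
    """Hand the utterance to the candidate with the highest weighted confidence."""
    return max(candidates, key=lambda c: c.confidence()).skill


# "Play something by The Whitlams": both skills understand "play", but only the
# music skill knows The Whitlams as an artist.
candidates = [
    Candidate("podcast_player", ["play_verb"]),
    Candidate("music_library", ["play_verb", "artist"]),
]
print(resolve(candidates))  # music_library
```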

Text-to-speech conversion

At the other end of the voice-interaction lifecycle is text-to-speech (TTS). Again, there are several open source TTS options available. A TTS model is usually trained by gathering recordings of speakers of a language reading a structured corpus, or set of phrases. Machine learning techniques are then applied to build a general TTS model from the recordings, usually for a specific language.
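The article doesn't say how these phrase sets are chosen. One common approach, assumed here, is to pick prompts greedily so that each new phrase adds as many not-yet-covered phonemes as possible, which keeps the recording session short. The phrases and their simplified ARPAbet phoneme sets below are toy data.

```python
def select_script(candidates: dict[str, set[str]], max_phrases: int) -> list[str]:
    """Greedily choose phrases that add the most new phonemes each round."""
    covered: set[str] = set()
    chosen: list[str] = []
    remaining = dict(candidates)
    while remaining and len(chosen) < max_phrases:
        phrase, phones = max(remaining.items(), key=lambda kv: len(kv[1] - covered))
        if not phones - covered:
            break  # no remaining phrase adds anything new
        chosen.append(phrase)
        covered |= phones
        del remaining[phrase]
    return chosen


# Toy prompts with simplified phoneme sets.
prompts = {
    "the cat sat": {"DH", "AH", "K", "AE", "T", "S"},
    "a big dog": {"AH", "B", "IH", "G", "D", "AO"},
    "the dog sat": {"DH", "AH", "D", "AO", "G", "S", "AE", "T"},
    "big cats": {"B", "IH", "G", "K", "AE", "T", "S"},
}
print(select_script(prompts, max_phrases=5))  # ['the dog sat', 'big cats']
```

Two of the four prompts already cover every phoneme in this toy set. The greedy choice does not guarantee the smallest possible script, but it is simple and usually close.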

MaryTTS is one of the most popular options and supports several European languages. eSpeak has TTS models available for more than 20 languages, although the quality of synthesis varies considerably between languages.

Mycroft AI's Mimic TTS engine is based on the CMU Flite TTS synthesis engine and has two voices available for English. The newer Mimic 2 TTS engine is a Tacotron-based implementation with a less robotic, more natural-sounding voice. Mimic runs on-device, while Mimic 2, due to its compute requirements, runs in the cloud. Additionally, Mycroft AI has recently released the Mimic Recording Studio, an open source, Docker-based application that lets people make recordings that can then be used to train an individual voice with Mimic 2.

This helps address one of the many problems with TTS: having natural-sounding voices available in a range of genders, languages, and dialects.

Parting notes

A growing range of open source voice tools is now available, each with its own benefits and drawbacks. One thing is certain, though: the push toward more mature open source voice solutions that protect privacy is here to stay.

Kathy Reid will present Beach Wreck Ignition: Challenges in open source voice at linux.conf.au, January 21-25 in Christchurch, New Zealand.
