A Markov model (named after the mathematician Andrey Markov) is used for forecasting in systems of random change. Markov's insight is that good predictions in this context can be made from only the most recent occurrence of an event, ignoring any occurrences before the current one. The approach might be described as memoryless or history-agnostic prediction.

Markov's first example (in 1913) predicted vowel occurrences in Pushkin's poem "Eugeny Onegin." The challenge today is to find a research area in which Markov models play no role. Such models are used to study thermodynamics and statistical mechanics; bioinformatics, enzyme activity, and population dynamics; solar irradiance and wind power; price trends; speech recognition and generation; data compression and pattern recognition; reinforcement learning and gesture recognition. The list goes on and on.

This article covers the Hidden Markov Model (HMM), a refinement of the original. An introduction to HMM and the Viterbi algorithm, which is well suited for HMMs, sets up a full code example in Perl.

1. Random walks with the Markov property

Imagine a fox that is foraging for food and currently at location C (e.g., by a bush next to a stream). The previous locations on the fox's search path are P1, P2, P3, and so on. At issue is how to predict the fox's next location.

One approach would be to use the entire search history P1, P2,…, C to predict the next location. For example, already visited locations in the fox's search might be given a very low probability of being the next location on the grounds that the fox is smart enough not to repeat failed search locations. By contrast, nearby but unvisited locations (e.g., an outcropping across the stream) might be given a high probability.

A simpler approach is to assume that the fox's next location N can be predicted as accurately from the current location C alone as from the entire search history P1, P2,…, C. If so, the fox's search can be modeled as a process with the Markov property. In this example, the property means that the probability distribution for the fox's next search location depends only on the fox's current location—and not on the locations prior to it. A Markov process thus can be described as a random walk that exhibits the Markov property.

The Markov property can be expressed succinctly by using the notation of conditional probability: P(A|B) is the probability of A given B. In the fox example, the Markov property supports an equality: P(N|P1,P2,…,C) = P(N|C) where C and N are, respectively, the current and the next location.

2. HMM and the Viterbi algorithm

In the fox example, each state of interest in the process (foraging at a particular location) is, by assumption, visible to an observer: no state is hidden. In the HMM refinement, the states are hidden, but each state has one or more associated output tokens (aka observations), which are not hidden. The goal is to infer the state sequence from the observations. A classic example is the urn problem.

Imagine a hidden room that contains multiple urns, each with balls labeled B1, B2, and so on. It is unknown whether every urn contains the same balls. A robot, in secret, selects an urn and picks a random ball from it, placing a copy of the ball's label onto a conveyor belt that the observer sees. The observer thus knows the sequence of picked balls (e.g., B9, B4, B13,…) but not the urn from which a given ball is picked. After picking a ball from an urn and copying the label, the robot returns the ball to its host urn before the next pick.

A picked urn belongs to the hidden state in this process, and the copies of the ball labels placed on the conveyor belt are the output tokens. The robot has a recipe (albeit a secret one) for picking each urn; hence, this urn-picking can be modeled as a Markov process with a hidden state—an HMM.

The Viterbi algorithm, from the late 1960s, can be used to infer the likely sequence of hidden states (in this example, the sequence of urn picks) from the observed events (in this case, the labels on the conveyor belt). The inferred sequence is called the Viterbi path in Viterbi's honor.

As an example, consider this slice of the output tokens:

B9,B4,B13,B27,B6,B13,B29 ## sequence of 7 observationsThe underlying Viterbi path might be:

B9 B4 B13 B27 B6 B13 B29 ## observations

/ / / / / / /

UrnD-->UrnA-->UrnF-->UrnA-->UrnG-->UrnF-->UrnN ## hidden statesThe Viterbi algorithm infers the robot's hidden state transitions (urn picks) from the labels observed on the conveyor belt. The inference is, of course, statistical in nature. There is guessing, but the Viterbi algorithm should make this of the educated flavor.

3. The Viterbi algorithm in detail

The Viterbi algorithm uses six inputs to generate the Viterbi path:

- The observation space consists of the possible output tokens or observations (e.g., B1, B2, B3, and so on).

- The sequenced observations is the order in which the tokens are observed (e.g., B9, B4, B13, and so on).

- The state space consists of the possible hidden states (e.g., UrnA, UrnB, and so on).

- The initial probabilities are the likelihoods that a particular urn represents the start state in the robot's selection process.

- The transition probabilities are the likelihoods of a transition from one urn to another, including back to itself.

- The emission probabilities are the likelihoods that an observed ball comes from a specific urn.

The output is:

- The inferred hidden state sequence (in this example, selected urn sequence), which is the Viterbi path.

These inputs can be learned from examples rather than stipulated arbitrarily. Accordingly, there are two sections in the short code example that follows. The first section simulates machine learning from examples, and the second section uses the results from this learning to infer an HMM that underlies the sequence of observed ball labels.

4. The hmm program

The hmm program below is available from my website. Unix-type systems come with Perl installed, but this program requires the Algorithm::Viterbi package that is available at CPAN and other Perl repositories. The optional package Data::Dumper can be used to view the Viterbi inputs in full; to do so, uncomment the program's last line.

Example 1. The hmm program

use strict;

use warnings;

use Algorithm::Viterbi;

use Data::Dumper; ## to view the inputs in full

use constant HowMany => 64_000; # 64K

use constant UrnCount => 5; # five urns

use constant TokenCount => 64; # observations

my @urns = ('UrnA', 'UrnB', 'UrnC', 'UrnD', 'UrnE');

my %balls = ('UrnA' => ['B1', 'B2', 'B4', 'B5', 'B7', 'B8'],

'UrnB' => ['B1', 'B3', 'B5', 'B7'],

'UrnC' => ['B2', 'B3', 'B4', 'B6', 'B7'],

'UrnD' => ['B1', 'B2', 'B3', 'B5', 'B7'],

'UrnE' => ['B2', 'B3', 'B5', 'B8']);

my @data = (); # list of ball-urn pairs for training

sub get_ball_urn_pair { ## return a ball-urn pair or just a ball

my $both = shift;

my $urn = $urns[int(rand(UrnCount))];

my $list = $balls{$urn};

my $i = int(rand(scalar @$list));

return $both ? ($list->[$i], $urn) : $list->[$i];

}

#### execute

## randomly pick an urn and a ball from it

srand(time());

for (my $i = 0; $i < HowMany; $i++) {

my @add = get_ball_urn_pair(1); # random pick

push(@data, \@add);

}

## train the algorithm

my $viterbi = Algorithm::Viterbi->new();

$viterbi->train(\@data);

## generate observations

my @tokens = (); ## observed labels

for (my $i = 0; $i < TokenCount; $i++) {

my $ball = get_ball_urn_pair(0); # random pick

push(@tokens, $ball);

}

## output Viterbi path

my $v_path = ($viterbi->forward_viterbi(\@tokens))[1]; ## Viterbi path

print join('->', @$v_path), "\n";

# print Dumper($viterbi); ## uncomment to see the probabilities in fullThe hmm program uses five urns (UrnA through UrnE), but with different balls in each of the urns:

my %balls = ('UrnA' => ['B1', 'B2', 'B4', 'B5', 'B7', 'B8'],

'UrnB' => ['B1', 'B3', 'B5', 'B7'],

'UrnC' => ['B2', 'B3', 'B4', 'B6', 'B7'],

'UrnD' => ['B1', 'B2', 'B3', 'B5', 'B7'],

'UrnE' => ['B2', 'B3', 'B5', 'B8']);The balls are stored in a map with the urn as the key and a list of contained balls as the value of this key.

The learning section in the hmm program uses randomly generated test data, which consist of 64,000 ball-urn pairs such as this:

B5, UrnD ## evidenceThis pair confirms that UrnD contains a ball labeled B5, but other pairs confirm that a ball B5 could come from some other urn:

B5, UrnA ## more evidenceThe two examples together indicate that a B5 ball could have come from UrnA or UrnD. The learning pairs do not provide any information about the robot except that it could pick a particular ball from a particular urn.

The test data from the learning section are stored in a list, which is passed to the train method of an Algorithm::Viterbi instance:

my $viterbi = Algorithm::Viterbi->new(); ## instantiate

$viterbi->train(\@data); ## pass training dataThe results of this call to train are clarified next.

5. Results of the training

The train method detects five possible states (urns) in the underlying HMM, as each urn occurs at least once within the randomly generated test data. Here are the states in detected order:

states => [UrnA, UrnD, UrnE, UrnB, UrnC]Recall that the three probabilities required for the Viterbi algorithm are the initial probabilities (for the start urn); the transition probabilities (the robot's move from current to next urn); and the emission probabilities (the likelihood that an observed label identifies a ball from a particular urn). The training yields these results for the initial probabilities:

start => {UrnA => 0.199578125,

UrnD => 0.201703125,

UrnE => 0.198546875,

UrnB => 0.200343750,

UrnC => 0.199828125}The values, which sum to 1, give each of the five urns a roughly one-in-five chance of being the robot's initial choice. UrnD has a slight edge over UrnB, but the results are basically a toss-up.

Here are the transition probabilities derived from the tests, with UrnC as the example:

UrnC => {UrnA => 0.198230642762076,

UrnE => 0.192885810970331,

UrnB => 0.204414287942599,

UrnD => 0.205128205128205,

UrnC => 0.198373602314489}With UrnC as the current state, the transition to any urn (including UrnC again) is about 20% probable.

The emission probabilities are interesting in that not every urn has every ball. For example, B6 occurs only in UrnC. Nonetheless, the Viterbi algorithm cannot rule out, on the basis of the training examples, that some other urn might contain this ball as well. The result of training gives only a conservative 20% likelihood that an emitted B6 label originates from a ball in UrnC:

B6 => {UrnC => 0.201110329189147}By contrast, a B7 occurs in four of the urns, and the training data include such occurrences so that there are probabilities for a B7 having originated in each of the four urns:

B7 => {UrnA => 0.160259923275660,

UrnB => 0.244657619716113,

UrnD => 0.197923929041754,

UrnC => 0.201266713581985}From the training data, UrnB is the most likely source of a B7 ball, whereas UrnA is the least likely source of this ball. (UrnA and UrnB both contain a B7 ball.)

In review, the training data support statistical inferences on three key matters:

- The urn that the robot is likely to pick initially. In this case, each urn has about the same chance of being picked.

- Given a picked urn, the urn that the robot is likely to pick next, which might be the same urn again. The training data suggest that all of the urn-to-urn transitions are equally likely.

- The urn from which a particular ball (e.g., B7) has been picked. Here the likelihoods differ significantly because not every urn holds the same balls.

The Viterbi algorithm uses the three input probabilities, and especially the last one on emissions, in trying to determine—from a sequence of observed labels—the sequence of urns from which the balls with the observed labels have been picked.

6. The observations

The final input to the Viterbi algorithm consists of observations—the labels visible on the conveyor belt. The hmm program generates these values randomly, as well:

my @tokens = (); ## observed labels

for (my $i = 0; $i < TokenCount; $i++) {

my $ball = get_ball_urn_pair(0); ## random pick

push(@tokens, $ball);

}With the observations in hand, the final step is to pass them as an argument to the forward_viterbi method, which infers the underlying HMM and returns it as the Viterbi path:

my $v_path = ($viterbi->forward_viterbi(\@tokens))[1]; ## Viterbi pathThe program then outputs the result as a sequence of state transitions:

UrnB->UrnE->UrnE->UrnB->UrnC->...->UrnB->UrnE->UrnB->UrnAOn this sample run, only UrnD fails to appear in the Viterbi path. Every ball in UrnD occurs in at least one other urn, and the transition probabilities do not favor UrnD as a target. The algorithm thus can present a plausible state sequence that excludes UrnD from the HMM.

The forward_viterbi method also returns two probabilities, which represent confidence levels in the inferred Viterbi path. For simplicity, only the Viterbi path is output here.

7. Divide-and-Conquer versus Dynamic-Programming

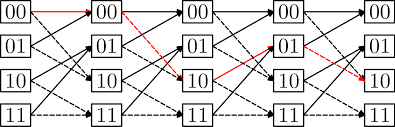

The hmm program is fast, which prompts the question of how the Viterbi algorithm works. The algorithm builds a trellis, which is a graph whose nodes are in vertical slices: higher slices represent earlier times, and lower slices represent later times. Figure 1 is a general example from Wikipedia, with the shortest left-to-right path shown in red:

Figure 1. A trellis

The Viterbi algorithm, starting at a node in the top slice, computes the shortest path through the trellis. This is the Viterbi path, whose nodes represent the HMM states inferred from the observations. The algorithm uses a classical dynamic-programming technique for efficiency. A simpler (and likely more familiar) example clarifies this technique, highlighting the memoization that is so easy in Perl.

Consider a divide-and-conquer approach to computing the Fibonacci numbers, using a recursive fib function as the implementation:

sub fib { ## divide and conquer: exponential time

my ($n) = shift; # read argument

return $n if $n < 2; # base case

return fib($n - 1) + fib($n - 2); # recursive case

}To compute a value such as fib(40), the function divides the problem into the subproblems of fib(39) and fib(38). The divisions continue until a subproblem is small enough to solve directly; in this case, computing the value of either fib(0) or fib(1).

The value of fib(40) is 102,334,155—but the recursive computation requires 331,160,281 calls to the fib function, which underscores the exponential time-complexity of this divide-and-conquer design. The difficulty is that too many values are recomputed over and over again because of the recursive calls with n-1 and n-2 as the arguments. For example, fib(40) calls fib(39) and fib(38); but fib(39) again calls fib(38). Each of the latter two calls results in a call to fib(37), and so on.

A change in design is needed. The fib function as written can be memoized, which thereby changes the design from divide-and-conquer to dynamic-programming. Both designs break a problem down into more manageable subproblems until a subproblem can be solved straightforwardly. The two approaches differ in that dynamic programming caches subproblem solutions so that these can be reused as needed. In short, caching replaces recomputing. The result can dramatically improve efficiency.

A memoized version of fib is easy to get in Perl, requiring no change at all to the original source code:

memoize('fib'); # dynamic-programming: linear time

fib(40); # 41 callsWith the memoized version, fib(40) requires only 41 calls to compute the value. Under the hood, the memoize call provides, as an additional argument to the fib function, a hashtable that stores already computed Fibonacci values; hence, each required Fibonacci value is computed exactly once. The exponential time-complexity of the divide-and-conquer version is reduced to linear time-complexity.

The Viterbi algorithm uses the same caching approach in computing the shortest path through the trellis, a path that represents the HMM. The hmm program is efficient because the Viterbi algorithm is so.

1 Comment