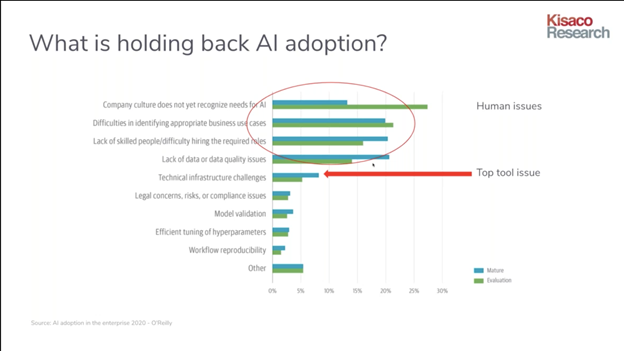

In late 2019, O'Reilly hosted a survey on artificial intelligence (AI) adoption in the enterprise. The survey broke respondents into two stages of adoption: Mature and Evaluation.

When asked what's holding back their AI adoption, those in the latter category most often cited company culture. Trouble identifying good use cases for AI wasn't far behind.

AI adoption in the enterprise 2020 (O'Reilly, ©2020)

MLOps, or machine learning operations, is increasingly positioned as a solution to these problems. But that leaves a question: What is MLOps?

It's fair to ask for two key reasons. This discipline is new, and it's often confused with a sister discipline that's equally important yet distinctly different: artificial intelligence for IT operations, or AIOps.

Let's break down the key differences between these two disciplines. This exercise will help you decide how to use them in your business or open source project.

What is AIOps?

AIOps is a series of multi-layered platforms that automate IT to make it more efficient. Gartner coined the term in 2017, which emphasizes how new this discipline is. (Disclosure: I worked for Gartner for four years.)

At its best, AIOps allows teams to improve their IT infrastructure by using big data, advanced analytics, and machine learning techniques. That first item is crucial given the mammoth amount of data produced today.

When it comes to data, more isn't always better. In fact, many business leaders say they receive so much data that it's increasingly hard for them to collect, clean, and analyze it to find insights that can help their businesses.

This is where AIOps comes in. By helping DevOps and data operations (DataOps) teams choose what to automate, from development to production, this discipline helps open source teams predict performance problems, do root cause analysis, find anomalies, and more.
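To make "find anomalies" concrete, here is a minimal sketch of one simple technique: flagging a metric sample that sits far from the mean of the samples just before it (a trailing-window z-score). It isn't taken from any particular AIOps platform. The function name `find_anomalies`, the `window` and `threshold` defaults, and the latency numbers are all assumptions made up for illustration.

```python
from statistics import mean, stdev


def find_anomalies(values, window=5, threshold=3.0):
    """Return the indices of samples that deviate from the mean of the
    preceding `window` samples by more than `threshold` standard deviations.

    Hypothetical helper for illustration; real AIOps tooling typically uses
    more robust models (seasonality, multiple signals, and so on).
    """
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Flat history: treat any change at all as a deviation.
            if values[i] != mu:
                anomalies.append(i)
            continue
        if abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Made-up response times in milliseconds, with one obvious spike.
latency_ms = [102, 98, 101, 99, 100, 103, 97, 450, 101, 99]
print(find_anomalies(latency_ms))  # → [7]
```

The spike at index 7 is flagged, while the normal samples that follow it are not, because the spike inflates the standard deviation of their trailing window. That side effect is one reason production systems tend to prefer more robust statistics than a plain mean and standard deviation.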

What is MLOps?

MLOps is a multidisciplinary approach to managing machine learning algorithms as ongoing products, each with its own continuous lifecycle. It's a discipline that aims to build, scale, and deploy algorithms to production consistently.

Think of MLOps as DevOps applied to machine learning pipelines. It's a collaboration between data scientists, data engineers, and operations teams. Done well, it gives members of all teams more shared clarity on machine learning projects.
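One way to picture "models as ongoing products" is a promotion gate: each trained model is recorded with its parameters and metrics, and it replaces the production model only if it measures up. The sketch below is illustrative, not the API of any MLOps tool. `ModelVersion`, `promote`, the stage names, and the metric values are all hypothetical.

```python
from dataclasses import dataclass
import hashlib
import json


@dataclass
class ModelVersion:
    """Hypothetical record of one trained model (illustration only)."""
    name: str
    params: dict
    metrics: dict
    stage: str = "staging"

    @property
    def version_id(self):
        # Derive a short, repeatable ID from the name and parameters so the
        # same configuration always maps to the same version.
        payload = json.dumps(
            {"name": self.name, "params": self.params}, sort_keys=True
        )
        return hashlib.sha256(payload.encode()).hexdigest()[:8]


def promote(candidate, current=None, metric="accuracy", min_gain=0.0):
    """Move `candidate` to production if there is no current model or if it
    beats the current model on `metric` by at least `min_gain`.

    Returns True if the candidate was promoted, False otherwise.
    """
    if current is not None:
        required = current.metrics[metric] + min_gain
        if candidate.metrics[metric] < required:
            return False
        current.stage = "archived"
    candidate.stage = "production"
    return True


# Made-up example: a retrained model is compared with the one in production.
live = ModelVersion("churn", {"depth": 4}, {"accuracy": 0.88}, stage="production")
retrained = ModelVersion("churn", {"depth": 6}, {"accuracy": 0.91})

if promote(retrained, live, min_gain=0.01):
    print(f"{retrained.version_id} promoted; {live.version_id} is now {live.stage}")
```

Because the rule lives in code rather than in someone's head, data scientists, data engineers, and operations staff can all see why a given model is, or isn't, in production.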

MLOps has obvious benefits for data science and data engineering teams. Since members of both teams sometimes work in silos, using shared infrastructure boosts transparency.

But MLOps can benefit other colleagues, too. This discipline gives the operations side more control over meeting regulatory requirements.

As an increasing number of businesses start using machine learning, they'll come under more scrutiny from the government, media, and public. This is especially true of machine learning in highly regulated industries like healthcare, finance, and autonomous vehicles.

Still skeptical? Consider that just 13% of data science projects make it to production. The reasons are outside this article's scope. But just as AIOps helps teams automate their tech lifecycles, MLOps helps teams choose which tools, techniques, and documentation will help their models reach production.

When applied to the right problems, AIOps and MLOps can both help teams hit their production goals. The trick is to start by answering this question:

What do you want to automate? Processes or machines?

When in doubt, remember: AIOps automates machines while MLOps standardizes processes. If you're on a DevOps or DataOps team, you can, and should, consider using both disciplines. Just don't mistake one for the other.
