Dan Tepfer's piano worlds on NPR inspired me to explore my own universe of augmented piano playing. Could I write a program that learns in real time to improvise in my style during the breaks in my own playing?

The following article is pretty much a play-by-play of making PianoAI. If you just want to try out PianoAI, go to its GitHub repository for instructions and downloads. Otherwise, stay here and learn about my many programming follies.

Inspiration

After watching Dan Tepfer's video on NPR, I grabbed freeze frames of his computer and learned that he uses the Processing software to connect up to his (very fancy) MIDI keyboard.

I quite easily managed to put together something similar with my own (not fancy) MIDI keyboard.

As explained in the video, Dan's augmented piano playing basically allows you to mirror or echo certain notes in specified patterns. Many of his patterns seem to be song-dependent. After thinking about it, I decided I was interested in something a little different: I wanted a piano accompaniment that learns in real time to emulate my style and improvise in the spaces in my own playing on any song.

I'm not the first one to make a piano AI. Google made A.I. Duet, which is a great program. But I wanted to see if I could make an AI specifically tuned to my own style.

A journey into AI

My equipment is nothing special. I bought it used from a person who gave up trying to learn to play the piano. Pretty much any MIDI keyboard and MIDI adapter will do.

I generally need to do everything at least twice to get it right. I also need to draw everything out clearly to fully understand what I'm doing. My process for writing programs, then, is basically as follows:

- Draw it out on paper.

- Program it in Python.

- Start over.

- Draw it out on paper again.

- Program it in Go.

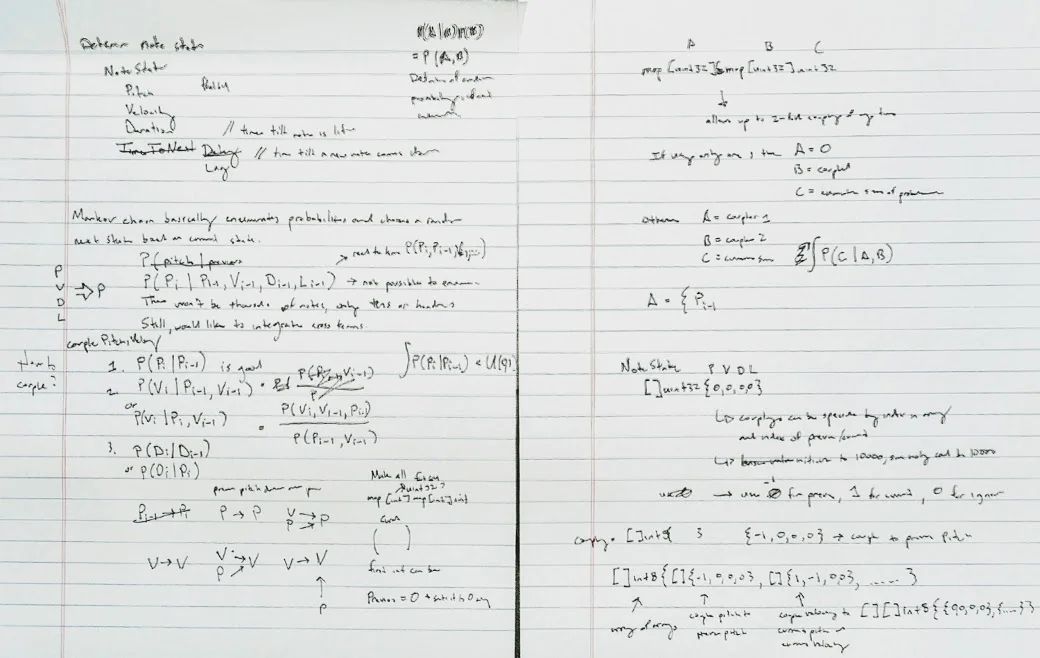

First two pages of 12 for figuring out what I'm doing

Each time I draw out my idea on paper, it takes about three sheets of paper before my idea actually starts to take shape.

Programming a Piano AI in Python

Once I had an idea of what I was doing, I implemented everything in a set of Python scripts. These scripts are built on Pygame, which has great support for MIDI. The idea is fairly simple — there are two threads: a metronome and a listener. The listener simply records notes played by the host. The metronome ticks along and plays any notes in the queue, or asks the AI to send some new notes if none are in the queue.
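The metronome/listener split can be sketched in Go (the language I eventually ported to) with goroutines and a shared queue. This is a minimal sketch, not the actual PianoAI code: there is no real MIDI here, the `Note`, `Queue`, `tick`, and `listen` names are hypothetical, and the "AI" is a placeholder that simply replays what it heard.

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

// Note is a hypothetical minimal note type: just a MIDI pitch.
type Note struct{ Pitch int }

// Queue holds notes; several goroutines touch it, so a mutex guards the slice.
type Queue struct {
	mu    sync.Mutex
	notes []Note
}

func (q *Queue) Push(ns ...Note) {
	q.mu.Lock()
	defer q.mu.Unlock()
	q.notes = append(q.notes, ns...)
}

// Pop removes and returns the oldest queued note; ok is false if empty.
func (q *Queue) Pop() (n Note, ok bool) {
	q.mu.Lock()
	defer q.mu.Unlock()
	if len(q.notes) == 0 {
		return Note{}, false
	}
	n, q.notes = q.notes[0], q.notes[1:]
	return n, true
}

// tick is one metronome beat: play the next queued note or, if the queue
// is empty, ask the AI for new notes and play the first of them.
func tick(q *Queue, askAI func() []Note, play func(Note)) {
	n, ok := q.Pop()
	if !ok {
		q.Push(askAI()...)
		if n, ok = q.Pop(); !ok {
			return // the AI had nothing to play
		}
	}
	play(n)
}

// listen records every incoming note until the channel is closed.
func listen(in <-chan Note, history *Queue, done chan<- struct{}) {
	for n := range in {
		history.Push(n)
	}
	close(done)
}

func main() {
	var queue, history Queue

	in := make(chan Note)
	done := make(chan struct{})
	go listen(in, &history, done)
	for _, p := range []int{60, 64, 67} { // the host plays a C major arpeggio
		in <- Note{Pitch: p}
	}
	close(in)
	<-done

	// Placeholder "AI": returns a copy of everything recorded so far.
	askAI := func() []Note {
		history.mu.Lock()
		defer history.mu.Unlock()
		return append([]Note(nil), history.notes...)
	}

	ticker := time.NewTicker(100 * time.Millisecond)
	defer ticker.Stop()
	for i := 0; i < 4; i++ {
		<-ticker.C
		tick(&queue, askAI, func(n Note) { fmt.Println("play", n.Pitch) })
	}
}
```

Run as-is, this plays 60, 64, 67 and then, once the queue drains, asks the placeholder AI again and starts over at 60.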

I made it somewhat pluggable, so the AI can easily be swapped out for different piano augmentations. There is an algorithm for simply echoing, one for playing notes within the chord structure (after it determines the chords), and one for generating piano runs from a Markov chain. Here's a movie of me playing with the last of these (when my right hand moves away from the keyboard, the AI begins to play until I play again):

My first piano-playing AI
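One way to get that pluggability in Go is a small interface that every augmentation implements. This is a hypothetical sketch: the `Improviser` interface and both implementations are illustrations, not the scripts' real API. `Echo` corresponds to the echoing algorithm, and `Mirror` stands in for the kind of mirroring Dan Tepfer does; the chord and Markov algorithms would be further implementations of the same interface.

```go
package main

import "fmt"

// Improviser is a hypothetical plug-in interface: each algorithm receives
// the MIDI pitches just played and returns the pitches the AI should play.
type Improviser interface {
	Improvise(recent []int) []int
}

// Echo repeats what was played, shifted by a fixed number of semitones
// (0 for a plain echo, 12 for an octave up).
type Echo struct{ Shift int }

func (e Echo) Improvise(recent []int) []int {
	out := make([]int, len(recent))
	for i, p := range recent {
		out[i] = p + e.Shift
	}
	return out
}

// Mirror reflects each pitch around a fixed axis pitch.
type Mirror struct{ Axis int }

func (m Mirror) Improvise(recent []int) []int {
	out := make([]int, len(recent))
	for i, p := range recent {
		out[i] = 2*m.Axis - p
	}
	return out
}

func main() {
	recent := []int{60, 64, 67} // C major triad as MIDI pitches
	for _, ai := range []Improviser{Echo{Shift: 12}, Mirror{Axis: 62}} {
		fmt.Println(ai.Improvise(recent)) // [72 76 79], then [64 60 57]
	}
}
```

The player only ever holds an `Improviser`, so switching augmentations means passing in a different value rather than changing the playback loop.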

There were a couple of things I didn't like about this first version. First, it's not good. At best, the AI accompaniment sounds like a small child trying hard to imitate my playing. I think there are a couple of reasons for this, mainly that it ignores velocity data and the timing between notes.

Second, these Python scripts did not work on a Raspberry Pi (the video was shot on Windows), and I never figured out why. I had trouble on Python 3.4, so I upgraded to 3.6, but I still had weird problems: pygame.fastevent.post worked, but pygame.fastevent.get did not. I threw up my hands at this and found an alternative.

The alternative is to write this in Go. Go is notably faster than Python, which is quite useful since this is a low-latency application. My ears can discern timing discrepancies as small as 20 milliseconds, so I want to keep processing times to a minimum. I found a Go MIDI library, so porting was feasible.

Programming a piano AI in Go

I decided to simplify a little. Instead of making many modules with different algorithms, I would focus on the one I'm most interested in: a program that learns in real time to improvise in the spaces in my own playing. I took out more sheets of paper and began.

Day 1

Most of the code is about the same as in my previous Python scripts. When writing in Go, I found that spawning threads (goroutines) is much easier than in Python. Threads are all over this program: there are threads for listening to MIDI, threads for playing notes, and threads for keeping track of notes. I was tempted to use the brand-new Go 1.9 sync.Map in my threads, but I realized I needed maps of maps, which go beyond what sync.Map handles. Instead, I made a map of maps similar to another synchronized map store that I wrote (schollz/jsonstore).
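A mutex-guarded map of maps can be sketched like this. The layout (beat → pitch → velocity) and the `BeatStore` name are assumptions for illustration, not the real data structure. The point is that a `sync.Map` would synchronize only the outer level; creating or updating an inner map still needs a lock, so one `sync.RWMutex` around the whole nested map keeps every level consistent.

```go
package main

import (
	"fmt"
	"sync"
)

// BeatStore is a hypothetical nested store: beat -> pitch -> velocity.
// A single RWMutex guards both the outer map and every inner map.
type BeatStore struct {
	mu    sync.RWMutex
	beats map[int]map[int]int
}

func NewBeatStore() *BeatStore {
	return &BeatStore{beats: make(map[int]map[int]int)}
}

// Set records a note, creating the inner map for a beat on first use.
func (s *BeatStore) Set(beat, pitch, velocity int) {
	s.mu.Lock()
	defer s.mu.Unlock()
	if s.beats[beat] == nil {
		s.beats[beat] = make(map[int]int)
	}
	s.beats[beat][pitch] = velocity
}

// Notes returns a copy of one beat's notes so callers never share the
// inner map (and so can't race on it) after the lock is released.
func (s *BeatStore) Notes(beat int) map[int]int {
	s.mu.RLock()
	defer s.mu.RUnlock()
	out := make(map[int]int, len(s.beats[beat]))
	for p, v := range s.beats[beat] {
		out[p] = v
	}
	return out
}

func main() {
	s := NewBeatStore()
	var wg sync.WaitGroup
	for i := 0; i < 8; i++ { // eight goroutines writing concurrently
		wg.Add(1)
		go func(i int) {
			defer wg.Done()
			s.Set(i%2, 60+i, 64+i)
		}(i)
	}
	wg.Wait()
	fmt.Println(len(s.Notes(0)), len(s.Notes(1))) // 4 4
}
```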

I attempted to make everything classy (pun intended), so I implemented components (MIDI, Music, AI) as their own objects with their own functions. So far, the MIDI listening works great and seems to respond quickly. I also implemented playback functions, which also work. This was easy to do.

Day 2

I started by refactoring all the code into folders so that each object can have its own New function (in Go, each folder is a separate package, and every package can define its own New). The objects have solidified: there is an AI for learning and generating licks, a Music object for the piano note models, a Piano object for communicating with MIDI, and a Player object for pulling everything together.

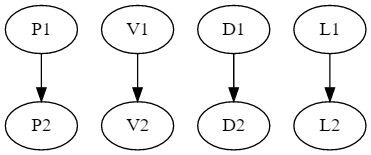

I spent a lot of time with pen and paper figuring out how the AI should work. I realized that there is more than one way to make a Markov chain out of piano notes. Piano notes have four basic properties: pitch, velocity, duration, and lag (time to next note). The basic Markov chain for piano notes would comprise four different Markov chains, one for each property. It would be illustrated like this:

Here is a basic Markov chain for piano properties.

Here, the pitch of the next note (P2) is determined from the pitch of the previous note (P1). Likewise for velocity (V1/V2), duration (D1/D2), and lag (L1/L2). The actual Markov chain simply tallies the relative frequencies of each property's values and uses a random selector to pick one. However, the piano properties are not necessarily independent. Sometimes there is a relationship between the pitch and velocity, or between the velocity and the duration of a note. To account for this, I've allowed for different couplings: you can couple a property to the current or the last value of any other property. Currently I'm allowing only two couplings, because that's complicated enough. But in theory, you could couple the value of the next pitch to the previous pitch, velocity, duration, and lag.
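Learning a chain and a coupled chain from the same notes can be sketched with one generic frequency table whose key is either a single previous value or a pair of values. This is an illustrative sketch (Go 1.18+ generics), not the PianoAI data structure: `Note`, `Chain`, and `pair` are hypothetical names, and the units are assumptions.

```go
package main

import "fmt"

// Note holds the four properties described above. Units are assumptions:
// MIDI pitch and velocity, with duration and lag in metronome ticks.
type Note struct{ Pitch, Velocity, Duration, Lag int }

// pair is a coupling key built from two property values.
type pair struct{ A, B int }

// Chain tallies how often each next value follows a conditioning key.
// K is a single previous value for an uncoupled chain, or a pair when
// two properties are coupled.
type Chain[K comparable] struct {
	counts map[K]map[int]int
}

func NewChain[K comparable]() *Chain[K] {
	return &Chain[K]{counts: make(map[K]map[int]int)}
}

// Add records one observed transition key -> next.
func (c *Chain[K]) Add(key K, next int) {
	if c.counts[key] == nil {
		c.counts[key] = make(map[int]int)
	}
	c.counts[key][next]++
}

func main() {
	played := []Note{{60, 80, 4, 4}, {64, 90, 4, 4}, {60, 80, 2, 2}, {64, 90, 4, 4}}

	pitch := NewChain[int]()     // uncoupled: P1 -> P2
	velocity := NewChain[pair]() // coupled: (V1, P2) -> V2

	for i := 1; i < len(played); i++ {
		prev, cur := played[i-1], played[i]
		pitch.Add(prev.Pitch, cur.Pitch)
		velocity.Add(pair{prev.Velocity, cur.Pitch}, cur.Velocity)
	}
	fmt.Println(pitch.counts[60])              // map[64:2]
	fmt.Println(velocity.counts[pair{90, 60}]) // map[80:1]
}
```

Adding a coupling only changes the key type, which is why coupling more than two properties is possible in principle, at the cost of much sparser tables.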

Once I had everything figured out theoretically, I began to implement the AI. The AI is simply a Markov chain: it builds a table of relative frequencies of note properties and has a function that picks the next value using the cumulative probabilities and a random number. At the end of the night, it worked! Well, kinda... Here's a silly video of me playing a lick to it and getting AI piano runs:

An example of using a basic Markov chain for piano accompaniment.
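Picking the next value from relative frequencies with cumulative probabilities and a random number looks roughly like this. `Sample` is a hypothetical helper, not a function from the repository. It takes the random number as a parameter so the selection is easy to test, and it sorts the keys because Go's map iteration order is randomized.

```go
package main

import (
	"fmt"
	"math/rand"
	"sort"
)

// Sample picks the next value from a table of counts by walking the
// cumulative distribution with a uniform random number r in [0, 1).
// It returns ok=false when the table is empty.
func Sample(counts map[int]int, r float64) (value int, ok bool) {
	keys := make([]int, 0, len(counts))
	total := 0
	for k, n := range counts {
		keys = append(keys, k)
		total += n
	}
	if total == 0 {
		return 0, false
	}
	sort.Ints(keys) // fixed order so the same r always gives the same value
	target := r * float64(total)
	cum := 0.0
	for _, k := range keys {
		cum += float64(counts[k])
		if target < cum {
			return k, true
		}
	}
	return keys[len(keys)-1], true // guard against floating-point round-off
}

func main() {
	// Pitch 60 was followed by 64 three times and by 67 once.
	next := map[int]int{64: 3, 67: 1}
	hits := map[int]int{}
	for i := 0; i < 10000; i++ {
		p, _ := Sample(next, rand.Float64())
		hits[p]++
	}
	fmt.Println(hits) // roughly 3:1 in favor of 64
}
```

With counts {64: 3, 67: 1}, any r below 0.75 selects 64 and anything from 0.75 up selects 67, which is exactly the 3:1 relative frequency.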

Seems like I have more improvements to make tomorrow.

Day 3

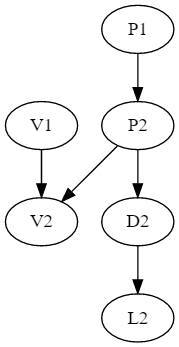

I couldn't find any improvements to make. But maybe the coupling I tried yesterday was not a good one. The coupling I'm most interested in can be visualized like this:

Here is an example of a Markov chain for piano properties, with lots of coupling.

In this coupling, the previous pitch determines the next pitch. The next velocity is determined by both the previous velocity and the current pitch. The current pitch also determines the duration. And the current duration determines the current lag. This needs to be evaluated in the correct order (pitch, duration, velocity, lag), and that's left up to the user, because I don't want to program in tree traversals.

Well, I tried this and it sounded pretty bad. I was massively disappointed in the results. Thinking I might need to try a different machine-learning approach, I decided to make my repo public; maybe someone would find it and take over for me, because I wasn't sure I would keep working on it.

Day 4

After thinking about it, I decided to try a neural net (commit b5931ff6), which went badly, to say the least. I tried several variations, too: feeding in the notes as pairs, either each property individually or all the properties as a vector. This didn't sound good; the timing was way off and the notes were all over the place.

I also tried a neural net where I sent the layout of the whole keyboard (commit 20948dfb), followed by the keyboard layout into which it should transition. It sounds complicated, because I think it is, and it didn't seem to work, either.

The biggest problem I noticed with the neural net is that it is hard to get randomness. I tried introducing a random column as a random vector, but it creates too many spurious notes. Once the AI piano lick begins, it seems to get stuck in a local minimum and doesn't explore much beyond that. To make the neural net work, I'd have to do what Google does and try to deep-learn what "melody" is, what a "chord" is, and what a "piano" is. Ugh.

Day 5

I give up.

I did give up. But then, while running in the forest, I couldn't help but rethink the entire project.

What is an AI, really? Is it supposed to play with my level of intelligence? What is my level of intelligence when I play? When I think about my own intelligence, I realize: I'm not very intelligent!

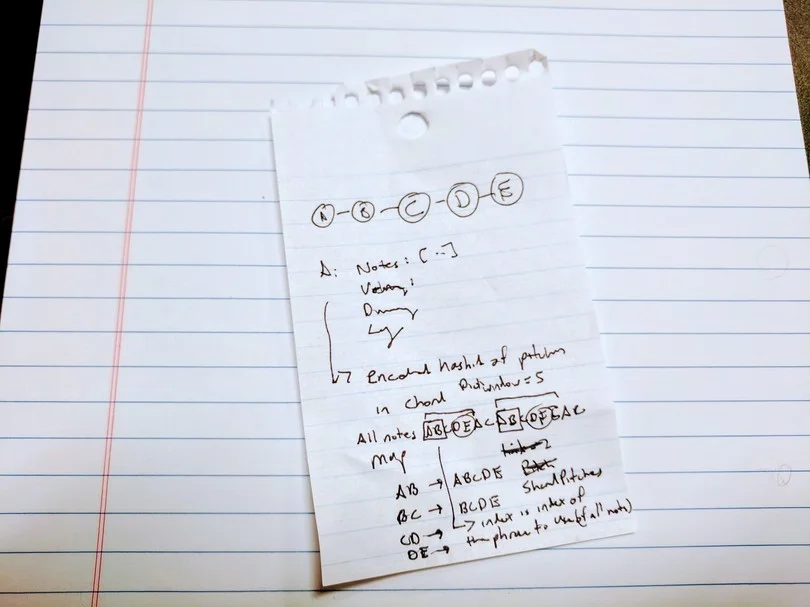

Yes, I'm a simple piano player. I simply play notes in scales that belong to the chords. I have little riffs that I mix in when I feel like it. Actually, the more I think about it, the more I realize that my piano improvisation is like linking up little riffs that I reuse or copy or splice over and over. So the piano AI should do exactly that.

Here are some more notes to gather my thoughts, but this time on a smaller page.

I wrote that idea down on the requisite piece of paper and went home to program it. Basically, this version of the AI was another Markovian scheme, but higher than first order (i.e., remembering more than just the last note). And the Markov transitions should link up larger segments of notes that are known to be riffs (i.e., based on my playing history). I implemented a new AI for this (commit bc96f512) and tried it out.

Day 6

What the heck! Someone put my Piano AI on Product Hunt today. Oh boy, I'm almost — but not completely — done, so I hope no one tries it today.

I like being on Product Hunt, but I wish I had finished first.

With a fresh mindset, I found a number of problems that were easy to fix:

- a bug where the maps have notes on the wrong beats (I still don't know how this happens, but I check for it now);

- a bug where the AI could start improvising while it was already improvising;

- a bug with concurrent access to a map; and

- a bug where the AI could improvise while it was still learning.
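The two "don't improvise while…" fixes can both be expressed with a single lock, sketched below. This is one possible approach under my own assumptions, not the actual fix: the `Player` type and its methods are hypothetical, and `sync.Mutex.TryLock` requires Go 1.18 or later. Learning takes the lock outright; an improvisation only starts if it can grab the lock immediately, so it is skipped rather than queued when the AI is busy.

```go
package main

import (
	"fmt"
	"sync"
)

// Player is a hypothetical sketch: busy is held while the AI is either
// improvising or learning, so the two can never overlap.
type Player struct {
	busy sync.Mutex
}

// TryImprovise runs play only if nothing else holds the lock and reports
// whether it ran. A second improvisation attempt is refused, not queued.
func (p *Player) TryImprovise(play func()) bool {
	if !p.busy.TryLock() {
		return false
	}
	defer p.busy.Unlock()
	play()
	return true
}

// Learn waits for any running improvisation to finish, then updates the model.
func (p *Player) Learn(update func()) {
	p.busy.Lock()
	defer p.busy.Unlock()
	update()
}

func main() {
	var p Player
	inner := false
	outer := p.TryImprovise(func() {
		inner = p.TryImprovise(func() {}) // nested attempt while improvising
	})
	fmt.Println(outer, inner) // true false

	p.Learn(func() {
		fmt.Println(p.TryImprovise(func() {})) // false: learning in progress
	})
}
```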

I added command-line flags so it's ready to go — and it actually works! Here are videos of me teaching for about 30 seconds and then jamming:

I got back lots of great feedback on this project. This Hacker News discussion is illuminating; here are some notable points:

- There is a great paper about musical composition by Peter Langston, which has a "riffology" algorithm that seems similar to mine.

- Dan Tepfer seems to be using SuperCollider, not Processing, for procedural music composition.

- There are some great sequence predictors that could be used to make this better.

If you have more ideas or questions, let me know. Tweet @yakczar.

This article was originally published on Raspberry Pi AI and is reprinted with permission.
