Before you can profile slow queries, you need to find them.

MySQL has a built-in slow query log. To use it, open the my.cnf file and set the slow_query_log variable to "On." Set long_query_time to the number of seconds that a query should take to be considered slow, say 0.2. Set slow_query_log_file to the path where you want to save the file. Then run your code and any query above the specified threshold will be added to that file.

Once you know which are the offending queries, you can start exploring what makes them slow. One tool that MySQL offers is the EXPLAIN keyword. It works with SELECT, DELETE, INSERT, REPLACE, and UPDATE statements. You simply prefix the query like this:

EXPLAIN SELECT picture.id, picture.title

FROM picture

LEFT JOIN album ON picture.album_id = album.id

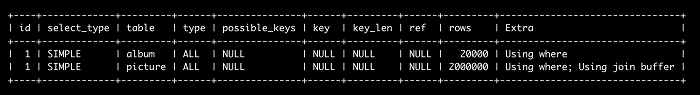

WHERE album.user_id = 1;The result you get is an explanation of how data is accessed. You see a row for each table that was involved in the query:

The important pieces here are the table name, the key used, and the number of rows scanned during the execution of the query.

It scans 2,000,000 pictures, then, for each picture, it scans 20,000 albums. This means that it actually scans 40 billion rows for the album table. However, you can make this process much more efficient.

Indexes

You can significantly increase performance by using indexes. Think of data as being names in an address book. You can either flip through all the pages, or you can pull on the right letter tab to quickly locate the name you need.

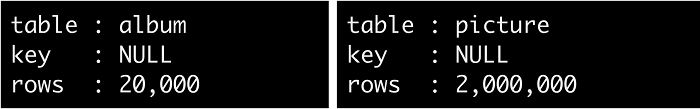

Use indexes to avoid unnecessary passes through tables. For example, you can add an index on picture.album_id like this:

ALTER TABLE picture ADD INDEX(album_id);Now if you run the query, the process no longer involves scanning the entire list of pictures. First, all the albums are scanned to find the ones that belong to the user. After that, the pictures are quickly located using the indexed album_id column. This reduces the number of rows scanned to 200,000. The query is also about 317 times faster than the original.

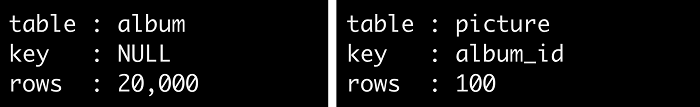

You can make sure that both tables use a key by adding the following index:

ALTER TABLE album ADD INDEX(user_id);

This time, the album table is not scanned in its entirety, but the right albums are quickly pinpointed using the user_id key. When these 100 albums are scanned, associated pictures are pinpointed using the album_id key. Each table uses a key for an optimal performance, making the query 380 times faster than the original.

This doesn't mean that you should add indexes everywhere because each index makes it longer to write to the database. You gain on read but lose on write. So only add indexes that actually increase read performance. Use EXPLAIN to confirm and remove any index that is not used in queries.

There are numerous other ways to increase performance, which you can learn more about at my OSCON talk Speed Up You Database 300 Times.

Anna will be giving the talk Speed Up You Database 300 Times at OSCON 2017 in Austin, Texas. If you're interested in attending the conference use this discount code when you register, for our readers: PCOS.

5 Comments