On a recent trip to Michigan, my friend Tim Sosa mentioned a blog post he read in which the author, Janelle Shane, describes how she built a neural network that generates color names from RGB values. I thought the idea was really neat, but I found the results underwhelming. A lot of the color names were nonsensical (i.e., not actual words), and the pairings between the generated names and the colors seemed pretty random, so it wasn't clear whether the model was actually learning a meaningful function to map colors to language. I wondered whether the idea could be improved, so, exclusively using open source software, I built a model to play around with; the code for the project can be found on my GitHub.

The first thing I noticed about the color data was that I could find only about 1,500 named colors, not the roughly 7,700 that Janelle's data apparently contained, so there's a discrepancy between the data she was using and what I found. (This is an example of why open science is important when doing non-trivial things!) My suspicion is that the model was mostly trying to learn a character-level language model from the data, so regardless of whether there are 1,500 named colors or 7,700, that's definitely not enough words and phrases to learn anything meaningful about the English language.

With that in mind, I decided to build a model that mapped colors to names (and vice versa) using pre-trained word embeddings. (I used these word2vec embeddings.) The advantage of using pre-trained word embeddings is that the model doesn't have to learn as much about language during training; it just needs to learn how different nouns and colors map to the RGB space and how adjectives should modify colors (e.g., "Analytical Gray"). For more information on word embeddings and why they’re useful, check out my introduction to representation learning.
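To make the idea concrete, here is a minimal sketch (not the project's actual code) of how a color name might be turned into a sequence of pre-trained word vectors before it reaches a model. The `TOY_EMBEDDINGS` table and the `embed_name` helper are hypothetical stand-ins: real word2vec vectors have hundreds of dimensions and would be loaded from a file rather than typed in by hand.

```python
# Hypothetical 3-dimensional stand-in for pre-trained word2vec vectors.
TOY_EMBEDDINGS = {
    "analytical": [0.1, -0.3, 0.2],
    "gray": [0.5, 0.5, 0.5],
    "deep": [-0.4, 0.0, 0.1],
    "purple": [0.6, -0.2, 0.7],
}


def embed_name(name, embeddings):
    """Turn a color name into a list of word vectors, skipping unknown words."""
    tokens = name.lower().split()
    return [embeddings[token] for token in tokens if token in embeddings]


vectors = embed_name("Analytical Gray", TOY_EMBEDDINGS)
print(len(vectors))  # 2: one vector for "analytical", one for "gray"
```

Because the vectors already encode something about what each word means, the model only has to learn how those meanings relate to color.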

Using these word embeddings, I built two different models: one that maps a name to a color, and one that maps a color to a name. The model that learned to map names to colors is a straightforward convolutional neural network, and it seems to work pretty well.
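For readers who haven't seen a convolutional network applied to text, the following is a rough, dependency-free sketch of a forward pass for a name-to-color model: a 1-D convolution over adjacent word vectors, max-pooling over positions, and three sigmoid outputs scaled to 0-255. The layer sizes, the function names (`conv_max_pool`, `predict_rgb`), and the random weights are all assumptions made for illustration; the actual architecture in my repository may differ, and an untrained network like this one produces arbitrary colors.

```python
import math
import random


def conv_max_pool(word_vectors, filters, width=2):
    """Apply each filter to every window of `width` adjacent words, keep the max."""
    dim = len(word_vectors[0])
    # Pad short names with zero vectors so at least one window exists.
    padded = word_vectors + [[0.0] * dim] * max(0, width - len(word_vectors))
    features = []
    for weights in filters:  # each filter is a flat list of width * dim weights
        activations = []
        for start in range(len(padded) - width + 1):
            window = [x for vec in padded[start:start + width] for x in vec]
            score = sum(w * x for w, x in zip(weights, window))
            activations.append(max(0.0, score))  # ReLU
        features.append(max(activations))  # max-pooling over positions
    return features


def predict_rgb(word_vectors, filters, output_weights):
    """Map pooled features to three sigmoid outputs scaled to 0-255."""
    features = conv_max_pool(word_vectors, filters)
    rgb = []
    for row in output_weights:  # one row of weights per channel: R, G, B
        z = sum(w * f for w, f in zip(row, features))
        rgb.append(round(255 / (1 + math.exp(-z))))
    return tuple(rgb)


# Random, untrained weights: the output only becomes meaningful after training.
rng = random.Random(0)
DIM, WIDTH, N_FILTERS = 3, 2, 4
filters = [[rng.uniform(-1, 1) for _ in range(WIDTH * DIM)] for _ in range(N_FILTERS)]
output_weights = [[rng.uniform(-1, 1) for _ in range(N_FILTERS)] for _ in range(3)]

# Hypothetical vectors standing in for the words "deep" and "purple".
deep_purple = [[-0.4, 0.0, 0.1], [0.6, -0.2, 0.7]]
print(predict_rgb(deep_purple, filters, output_weights))
```

The convolution window is what lets a modifier such as "deep" change the color predicted for the word next to it.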

Here are examples of what the model considers "red," "green," "blue," and "purple."

opensource.com

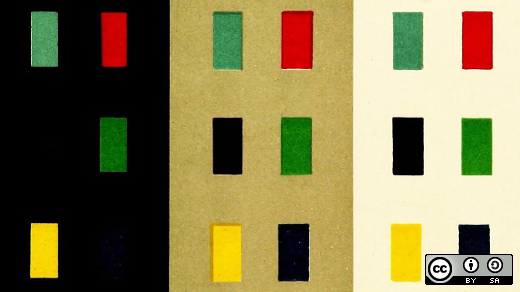

But the model also knows more interesting colors than those. For example, it knows that "deep purple" is a darker version of purple.

It also knows that "ocean" is an aqua color, and that "calm ocean" is a lighter aqua, while "stormy ocean" is a gray purple.

The model that learned to map colors to names is a recurrent neural network that takes RGB values as input and attempts to predict the word embeddings as output. I decided that rather than generate single color names, it would be more interesting/useful to generate candidate words that could then be used to come up with a color name. For example, when providing RGB values of 161, 85, and 130 (a fuchsia-like color) as input to the model, it generates the following:

| Word #1 candidates | Word #2 candidates | Word #3 candidates | Word #4 candidates |
| --- | --- | --- | --- |
| roses_hydrangeas | violet | purple | roses |
| lacy_foliage | lavender_flowers | pink | purple |
| tussie | wonderfully_fragrant | violet | pink |
| sweetly_fragrant | fuchsia | fuchsia | red |
| coppery_pink | anise_hyssop | roses | violet |
| grevillea | monarda | purple_blooms | fuchsia |
| oriental_poppies | purple | lavender_flowers | violets |
| maidenhair_ferns | purple_blooms | lavender | Asiatic_lilies |
| oak_leaf_hydrangea | cornflowers | Asiatic_lilies | purple_blooms |
| blue_hydrangeas | hibiscuses | monarda | flowers |

I personally like "sweetly fragrant fuchsia."
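One plausible way to get candidate lists like these from a network that outputs word embeddings is a nearest-neighbor search: take each predicted vector and rank the vocabulary by cosine similarity to it. The sketch below assumes that approach. The `candidates` helper, the toy vocabulary, and the `predicted` vector are all made up for illustration and are not taken from the project.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def candidates(predicted, embeddings, top_n=3):
    """Return the top_n vocabulary words closest to the predicted vector."""
    ranked = sorted(
        embeddings,
        key=lambda word: cosine(predicted, embeddings[word]),
        reverse=True,
    )
    return ranked[:top_n]


# Hypothetical 3-dimensional vocabulary; real word2vec vectors are far larger.
TOY_VOCAB = {
    "fuchsia": [0.9, 0.1, 0.6],
    "violet": [0.6, 0.1, 0.9],
    "green": [0.1, 0.9, 0.1],
    "easements": [-0.5, 0.2, -0.7],
}

predicted = [0.8, 0.1, 0.7]  # stand-in for one output step of the network
print(candidates(predicted, TOY_VOCAB, top_n=2))  # ['fuchsia', 'violet']
```

Running the same lookup once per output step would give one candidate column per word position, which matches the shape of the lists above.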

Similarly, when providing RGB values of 1, 159, and 11 (a booger-green color) as input to the model, it generates the following:

| Word #1 candidates | Word #2 candidates | Word #3 candidates | Word #4 candidates |
| --- | --- | --- | --- |
| greenbelt | green | green | green |
| drainage_easements | jade | plumbago | pineapple_sage |
| annexations | blues_pinks | purples_blues | plumbago |
| Controller_Bud_Polhill | purples_blues | allamanda | purple_leaved |
| Annexations | greeny | greeny | allamanda |
| Buttertubs_Marsh | sienna | purple_leaved | hibiscuses |
| sprigging | plumbago | lime | lime |
| easements | sage_Salvia | hibiscuses | snowberry |
| buffer_strips | olive | sage_Salvia | sage_Salvia |
| Growth_Boundary | purple_leaved | blues_pinks | purples_blues |

I personally like "sprigging green."

Anyway, it seems like the word embeddings allow the model to learn pretty interesting relationships between colors and language.

As I've discussed before, the great thing about doing machine learning in the 21st century is that there is an abundance of open source software and open educational materials to get started. This means anyone could have read Janelle's original blog post, had an idea for how to change the model, and been well on their way to implementing the change.

Maybe you have some ideas for how to adapt my model? If so, be sure to link to any forks in the comments!
