Common Voice, Mozilla’s open source project, is well on its way to becoming the world’s largest repository of human voice data for machine learning. Common Voice recently made Black Duck’s annual Open Source Rookies of the Year list.

What makes Common Voice special is in the details. Every language is spoken differently around the world, with wide variation in speech patterns, accents, and intonation. A smart speech recognition engine, with applications ranging from Internet of Things (IoT) devices to digital accessibility, can recognize speech from a diverse group of people only if it learns from a large number of samples. A database of speech recorded by people across many geographies helps make this ambitious machine learning possible.

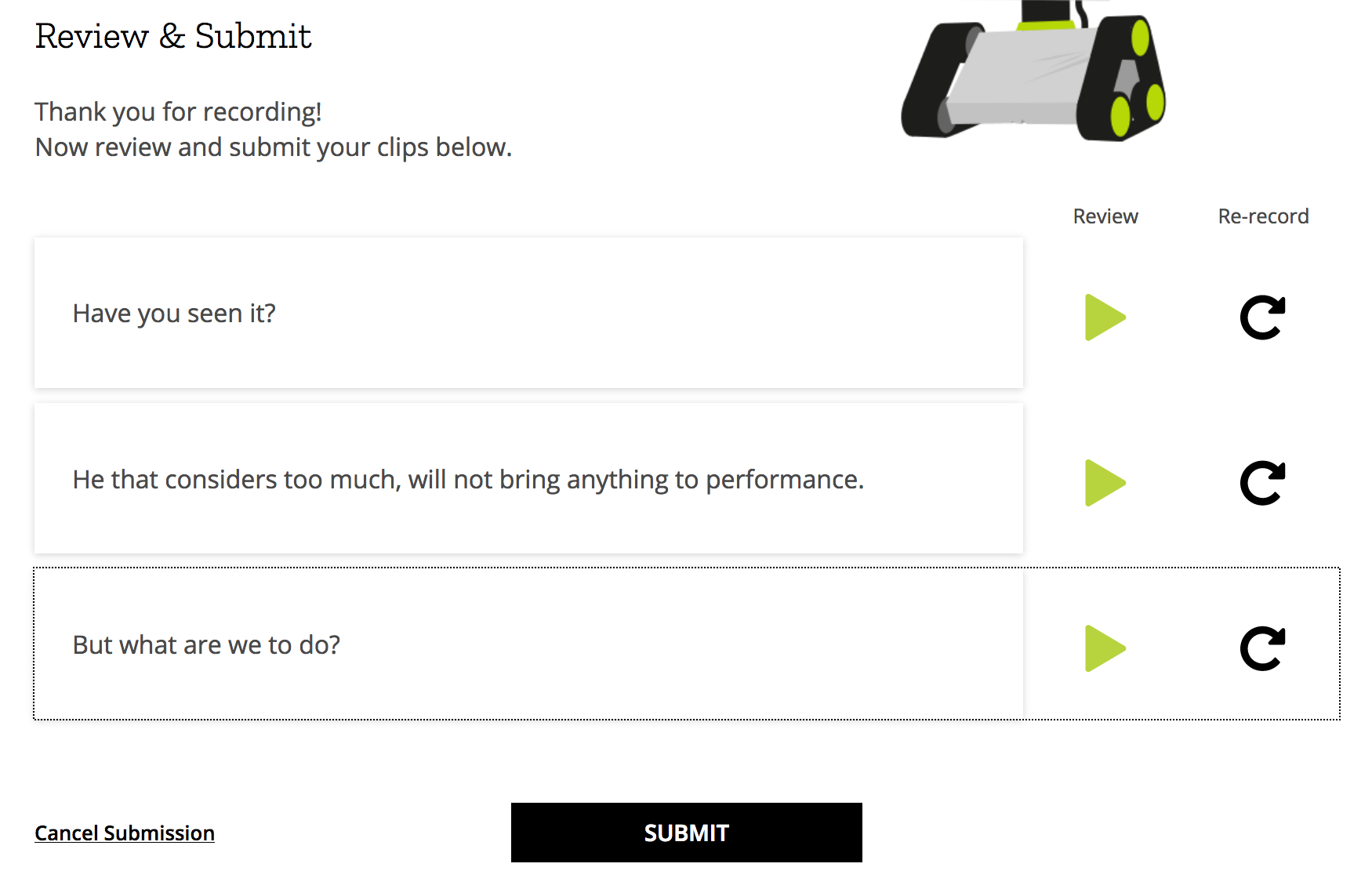

With Common Voice, contributors record their own voices with a tap of a button on the project’s website. The contributor front end is straightforward: go to the project’s site at https://voice.mozilla.org and click the “Speak up, contribute here!” option. That takes you to the “Speak” page, where you read three consecutive sentences aloud, review the recordings (re-recording any if needed), and save them. The saved recordings then go to a voicebank.

The voicebank is currently 12GB in size and holds more than 500 hours of English-language voice data collected from 112 countries since the project launched in June 2017. English is the only language available so far, but the project may expand to multiple languages this year.

The project aims to collect more than 10,000 hours of free and open, CC0-licensed voice data in many of the world’s languages. This data can be used to train machine learning models for IoT and other speech-dependent applications and organizations. The data is currently being used to train Mozilla’s TensorFlow implementation of Baidu’s DeepSpeech architecture, as well as models built with Kaldi, an open source speech recognition toolkit.

Michael Henretty, team lead for the Common Voice project, says, “Common Voice is merely the first step in making speech technology more open and accessible to anyone across the globe.” He hopes that “by collecting and freely sharing this data, we can empower a whole new generation of creators, innovators, entrepreneurs, researchers, and even hobbyists to create amazing voice experiences for whomever they want, wherever they want.” “For instance,” Henretty continues, “if someone wanted to create an assistive voice app for blind people who only speak Urdu, we hope to provide the data to make this possible. We believe voice interfaces will soon be everywhere, and with more and more internet-connected devices cropping up in the home, it’s important to make sure no one is getting left behind.”

Mozilla is exploring the Internet of Things through its Web of Things Gateway, Common Voice, and its DeepSpeech speech recognition engine.

Common Voice is open to contributions: anyone can go to the Speak page and contribute by reading the sentences that appear on the screen. All contributions are published on the Data page, where anyone can download the dataset at any time for their own use. The page also links to many other similar open datasets. In addition, the project codebase offers plenty of resources for developers working on speech recognition.
