The appearance of Hadoop and its related ecosystem was like a Cambrian explosion of open source tools and frameworks for processing large amounts of data. But companies that invested early in big data ran into some challenges. For example, they needed engineers with expert knowledge not only of distributed systems and data processing but also of Java and the related JVM-based languages and tools. Another issue was that the system constraints at the time were constantly evolving as new systems appeared to support in-memory processing and continuous data processing (streaming).

Not much has changed today. When a project wants to move to a new big data execution engine to take advantage of its new features, the chances of reusing existing code are low. Most of these engines have different APIs and many incompatible knobs that prevent easy portability and reuse.

Cheaper computing and storage and the rise of the cloud democratized the storage and analysis of enormous amounts of data. People with different skills demanded easier and friendlier ways to process this data. Most projects added support for SQL-like languages, but more advanced users such as data scientists demanded support for their favorite languages and, more importantly, their favorite libraries and tools (which are not necessarily the same as those available in the Java/Scala world). For example, Python has established itself as the lingua franca for data science, and most modern machine learning frameworks, like TensorFlow and Keras, target the language.

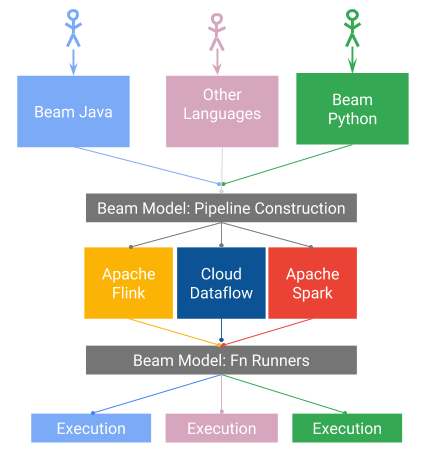

Apache Beam is a unified programming model designed to provide efficient and portable data processing pipelines. The Beam model is semantically rich and covers both batch and streaming with a unified API that can be translated by runners to be executed across multiple systems like Apache Spark, Apache Flink, and Google Dataflow.

Fig 1. The Apache Beam vision. Image by Tyler Akidau and Frances Perry used with authorization.
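To make the idea of "one pipeline definition, several runners" a bit more concrete, here is a minimal toy sketch in plain Python. It is not the Apache Beam API: the names `Pipeline`, `map_elements`, `filter_elements`, `run_batch`, and `run_streaming` are hypothetical and exist only for this illustration. The point is simply that the pipeline is described once and that two different execution strategies can consume the same description.

```python
from typing import Callable, Iterable, Iterator, List

# Hypothetical toy model for illustration only; this is NOT the Beam API.


def map_elements(fn: Callable) -> Callable[[Iterable], Iterator]:
    """Build a transform that applies fn to every element."""
    def apply(elements: Iterable) -> Iterator:
        return (fn(e) for e in elements)
    return apply


def filter_elements(pred: Callable) -> Callable[[Iterable], Iterator]:
    """Build a transform that keeps only elements where pred is truthy."""
    def apply(elements: Iterable) -> Iterator:
        return (e for e in elements if pred(e))
    return apply


class Pipeline:
    """An engine-independent description: just an ordered list of transforms."""
    def __init__(self, *transforms: Callable[[Iterable], Iterator]):
        self.transforms = list(transforms)


def run_batch(pipeline: Pipeline, data: List) -> List:
    """Toy 'batch runner': materializes the whole dataset after each step."""
    elements = list(data)
    for transform in pipeline.transforms:
        elements = list(transform(elements))
    return elements


def run_streaming(pipeline: Pipeline, source: Iterable) -> Iterator:
    """Toy 'streaming runner': chains lazy generators, one element at a time."""
    stream = iter(source)
    for transform in pipeline.transforms:
        stream = transform(stream)
    return stream


# The pipeline is defined once...
pipeline = Pipeline(
    map_elements(str.strip),
    filter_elements(bool),      # drop empty strings
    map_elements(str.upper),
)

# ...and executed by two different "runners".
batch_result = run_batch(pipeline, [" beam ", "", "flink"])
stream_result = list(run_streaming(pipeline, iter([" beam ", "", "flink"])))
```

Both runners produce the same elements from the same definition. Real Beam runners do far more (windowing, distribution, fault tolerance), so this should be read only as an analogy for the separation between defining a pipeline and executing it.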

Beam also tackles the issue of portability at the language level. But this feature has a new set of interesting design challenges:

- How can we define a language-specific version of the Beam model (SDK) in a way that feels native to the respective language?

- How can we represent data and data transformations in a language-agnostic way?

- How can we guarantee the execution of the different data transformations in isolation?

- How can we control, trace and track the progress of job execution and guarantee its reliability given that we are talking about code in different languages?

- How can we do all of this efficiently?

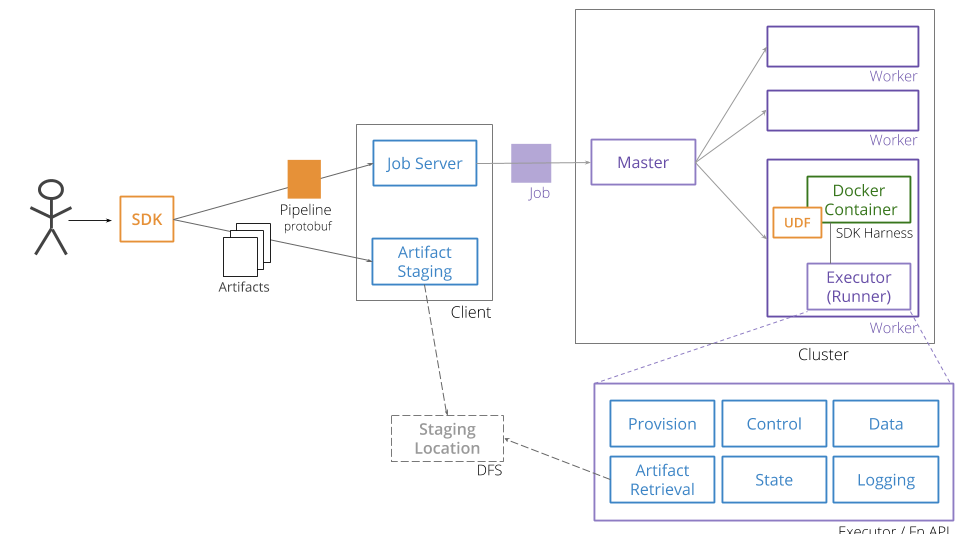

In order to address all these design concerns, Beam has created an architecture with a set of APIs together called the Portability API. They allow representing a data processing pipeline in a language agnostic way with protocol buffers, and a set of services that can be defined and consumed from different languages via gRPC.

Fig 1. The Apache Beam vision. Image by Tyler Akidau and Frances Perry. Used with authorization.

In the portable architecture (Fig. 2), pipeline construction is decoupled from the actual execution environment. Users define data pipelines using an SDK in their preferred language. This representation is translated into a protocol buffer version and sent to a job server, which uses a runner to translate the pipeline into a native job. The job is executed in the native system by a combination of a Docker container image with the user code and an SDK harness that is responsible for executing that code. The harness interacts with a set of services, known together as the Fn API, that offer different planes to control the execution of user-defined functions, transfer data between the native system and the container, manage state, and handle logging.
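The flow described above can be sketched in a few lines of plain Python. This is a simplified illustration under loud assumptions, not Beam's implementation: JSON stands in for protocol buffers, an in-process method call stands in for the gRPC-based Fn API, there is no Docker container, and every name (`register`, `build_pipeline_spec`, `SdkHarness`, `run_on_toy_runner`, the `example:` ids) is hypothetical.

```python
import json
from typing import Callable, Dict, Iterable, List

# Hypothetical sketch: JSON replaces protocol buffers, a method call replaces
# gRPC, and there is no container. None of these names come from Beam.

# Registry of user functions, keyed by a language-neutral identifier.
USER_FUNCTIONS: Dict[str, Callable[[str], List[str]]] = {}


def register(fn_id: str):
    """Decorator that makes a user function addressable by id."""
    def decorator(fn):
        USER_FUNCTIONS[fn_id] = fn
        return fn
    return decorator


@register("example:split_words")
def split_words(line: str) -> List[str]:
    return line.split()


@register("example:to_upper")
def to_upper(word: str) -> List[str]:
    return [word.upper()]


def build_pipeline_spec() -> str:
    """SDK side: describe the pipeline as data, referencing functions by id."""
    spec = {
        "transforms": [
            {"name": "SplitWords", "fn_id": "example:split_words"},
            {"name": "ToUpper", "fn_id": "example:to_upper"},
        ]
    }
    return json.dumps(spec)


class SdkHarness:
    """Stand-in for the SDK harness: the only component that runs user code."""
    def process_bundle(self, fn_id: str, elements: Iterable[str]) -> List[str]:
        fn = USER_FUNCTIONS[fn_id]
        output: List[str] = []
        for element in elements:
            output.extend(fn(element))  # each call may emit zero or more items
        return output


def run_on_toy_runner(spec_json: str, harness: SdkHarness,
                      data: Iterable[str]) -> List[str]:
    """Runner side: sees only the serialized spec, never the user functions."""
    spec = json.loads(spec_json)
    elements = list(data)
    for transform in spec["transforms"]:
        elements = harness.process_bundle(transform["fn_id"], elements)
    return elements


result = run_on_toy_runner(
    build_pipeline_spec(), SdkHarness(), ["hello beam", "portable pipelines"]
)
```

The design point this tries to capture is that the runner only ever handles a serialized description plus opaque function identifiers, while the harness is the only piece that needs to understand the user's language. In this sketch the harness and the registry happen to live in the same process, which the real architecture deliberately avoids.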

One nice consequence of the portability architecture is that user code is isolated in a container, which avoids the dependency conflicts that can be a burden in this kind of job. Eventually, we will be able to define pipelines that contain transforms written in different languages. For example, a Java pipeline could use a machine learning transform written in Python, or a Python pipeline could reuse existing Input/Output (IO) connectors already written in Java.

This is a new and exciting development in the big data world: the power of modern RPC and data serialization frameworks and containers, used together with existing data processing engines, to unleash polyglot data processing. These are still early days, and this is ongoing work in the Apache Beam community. Support for new languages like Go based on this approach is in progress. If you are interested in these ideas and want to follow and contribute to their progress, please join us at the Beam community and take a look at the portability webpage. And if by any chance you are going to be at KubeCon Europe, don't hesitate to come to my talk on Wednesday, May 2 to learn more about Beam and its portability features.
