Metrics. Measures. How high? How low? How fast? How slow? Ever since the dawn of humankind, we've had an innate and insatiable desire to measure and compare. We started with the Egyptian cubit and the Mediterranean traders' grain in the 3rd millennium BC. Today we have clicks per second, likes, app downloads, stars, and a zillion other ways to measure what we do. Companies spend ridiculously large sums of cash developing fancy KPI dashboards and chasing their needles on a daily—and sometimes hourly—basis, in the quest for the ultimate, ongoing measure of their health. Is it worth it? The answer to this question is as simple as it is annoying and not particularly helpful: It Depends.

In this column, I'm going to take you on a journey into the treacherous yet rewarding world of community metrics. I'll explore existing research, available tools, case studies (good and bad), and ultimately use what I've learned to develop a playbook for you to reference when creating (or improving) your own community metrics. You can then use these metrics to know—or at least approximate—where your community is today, where it's headed, and (spoiler alert!), what to do when that direction isn't quite what you need to reach your community's goals.

Vanity metrics

As a former community manager of a large open source community, I'm well aware of the addiction to, and dangers of, metrics that sound impressive but ultimately mean very little. Approximately once a year, when analyst firms sent out questionnaires to vendors of commercial open source, I would get this email:

To: James Falkner <awesome_community_manager@project>

From: CxO That Is Super Busy And Doesn't Have Time To Deal With This

Subject: updated metrics?

Hey James, what are the latest stats on our community? I'm filling out this analyst request and need:

- Downloads

- Registrations

- Number of developers

- Number of commits

- Number of forum posts

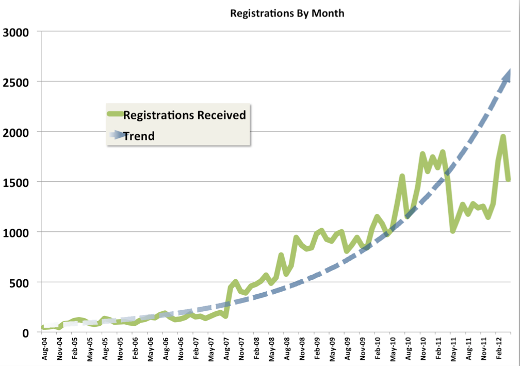

Suppressing my anger (and the reply I actually wanted to send), I would dutifully generate numbers that roughly corresponded to the above. For example, here is the graph I generated for registrations:

In February 2011 we were "dinged" for what was clearly a slowdown in registrations. Something was very wrong, they said. The ship was clearly on fire, they said, and the community manager was at the helm. Not surprisingly, my LinkedIn activity picked up quite a bit that month. So what happened? Funny story: it turns out that in February we had enabled a CAPTCHA on our registration form and started blocking spammers rather effectively, which drastically depressed the count of new registrations. A few months later, after the analyst report, spammers figured out a way around the CAPTCHA, and things returned to "normal".

Photo by MikeGogulski on Wikipedia, CC BY 2.5

These and other "first order" metrics belong to a class called vanity metrics, an awesome and accurate term for their superficiality and meaninglessness. Relying on them as a measure of community health is tempting, but taken out of context they can easily be misleading, gamed, or selectively chosen to fit a desired narrative. This was exemplified in "Daily Active Users," a recent episode of HBO's Silicon Valley. In the show, while the company celebrated the 500,000th download of its software platform, the founders realized that a more accurate measure of success, daily active users, was shockingly low, so they hired a click farm to game the system and impress investors. The plan backfired, and they ultimately had to fix the underlying usability issues, teaching them (and us) a valuable lesson about vanity metrics.

Ignorance is bliss

So what should you do with vanity metrics? It's OK if you want to collect, analyze, and attempt to draw conclusions from them. I get it, I really do. Just be aware of what you're doing. (I will explore various characteristics of metrics, vanity or otherwise, in future columns.) Overcoming an addiction to vanity metrics is possible, but undoing the damage they cause takes far more effort than producing them in the first place:

The bullshit asimmetry: the amount of energy needed to refute bullshit is an order of magnitude bigger than to produce it.

— Alberto Brandolini (@ziobrando) January 11, 2013

If vanity metrics are all you have, and you have no choice but to report them to a demanding exec or in an overdue analyst questionnaire, then go for it, but consider using the opportunity to explain why more accurate metrics that align with the business are better in the long run. And then get to work coming up with better metrics that more accurately tell the story of your open source community and help guide your decision making in the future. See you next month!
