Alongside the huge rise in the use of container technology over the past few years, we've seen similar growth in Docker. Because Docker made it easier to create containers than previous solutions did, it quickly became the most popular tool for running containers; however, it soon became apparent that Docker solved only part of the problem. Although Docker worked well for running containers locally on a single machine, it was less suited to running software at scale on a cluster. What was needed was an orchestration system that could easily schedule containers across multiple machines and add the missing pieces, such as services, deployments, and ingress. Projects old and new, including Mesos, Docker Swarm, and Kubernetes, stepped in to address this problem, and Kubernetes emerged as the most commonly used solution for deploying containers in production.

The Container Runtime Interface (CRI)

Initially, Kubernetes was built on top of Docker as its container runtime. Soon after, CoreOS announced the rkt container runtime and wanted Kubernetes to support it as well. Kubernetes ended up supporting both Docker and rkt, but this model did not scale well when it came to adding new features or supporting additional container runtimes.

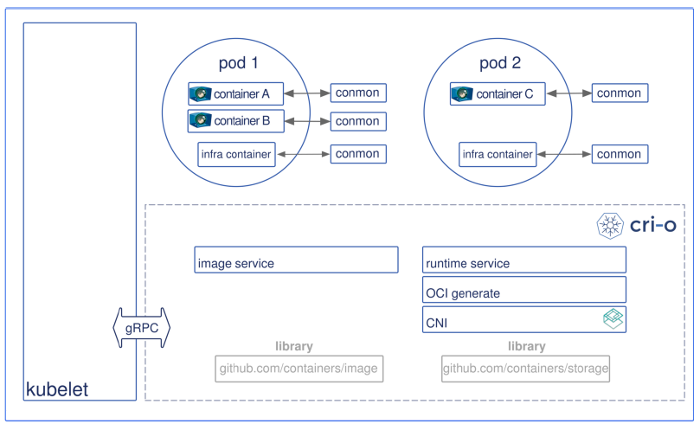

The Container Runtime Interface (CRI) was introduced to fix this problem. The CRI consists of two parts: the image service and the runtime service. The idea behind the CRI is to decouple the kubelet (the Kubernetes component responsible for running a set of pods on a local system) from the container runtime by using a gRPC API. This lets anyone build a container runtime for Kubernetes, as long as it implements all of the CRI methods.
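To illustrate the decoupling, here is a minimal Go sketch in which a kubelet-like function depends only on two interfaces, one for images and one for the runtime. The interface names, method signatures, and the in-memory `fakeRuntime` are hypothetical simplifications; the real CRI is defined in protobuf and served over gRPC, with many more methods.

```go
package main

import (
	"errors"
	"fmt"
)

// ImageService and RuntimeService are simplified, hypothetical stand-ins for
// the two halves of the CRI. They are NOT the real gRPC API.
type ImageService interface {
	PullImage(ref string) (imageID string, err error)
}

type RuntimeService interface {
	RunPodSandbox(podName string) (sandboxID string, err error)
	CreateContainer(sandboxID, imageID string) (containerID string, err error)
	StartContainer(containerID string) error
}

// fakeRuntime is a toy in-memory implementation of both interfaces.
type fakeRuntime struct {
	nextID  int
	started map[string]bool
}

func newFakeRuntime() *fakeRuntime {
	return &fakeRuntime{started: make(map[string]bool)}
}

func (f *fakeRuntime) newID(prefix string) string {
	f.nextID++
	return fmt.Sprintf("%s-%d", prefix, f.nextID)
}

func (f *fakeRuntime) PullImage(ref string) (string, error) {
	if ref == "" {
		return "", errors.New("empty image reference")
	}
	return f.newID("image"), nil
}

func (f *fakeRuntime) RunPodSandbox(podName string) (string, error) {
	return f.newID("sandbox"), nil
}

func (f *fakeRuntime) CreateContainer(sandboxID, imageID string) (string, error) {
	return f.newID("ctr"), nil
}

func (f *fakeRuntime) StartContainer(id string) error {
	f.started[id] = true
	return nil
}

// startPod plays the kubelet's role: it only talks to the interfaces,
// so any runtime that implements them can be swapped in.
func startPod(images ImageService, runtime RuntimeService, podName, imageRef string) (string, error) {
	imageID, err := images.PullImage(imageRef)
	if err != nil {
		return "", err
	}
	sandboxID, err := runtime.RunPodSandbox(podName)
	if err != nil {
		return "", err
	}
	ctrID, err := runtime.CreateContainer(sandboxID, imageID)
	if err != nil {
		return "", err
	}
	return ctrID, runtime.StartContainer(ctrID)
}

func main() {
	rt := newFakeRuntime()
	id, err := startPod(rt, rt, "web", "docker.io/library/nginx:latest")
	fmt.Println(id, err, rt.started[id])
}
```

Because `startPod` never names a concrete runtime, replacing `fakeRuntime` with another implementation requires no change to the caller, which is the property the CRI aims for.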

There were also problems when updating Docker versions to work with Kubernetes. Docker was growing in scope and adding features to support other projects, such as Swarm. Kubernetes didn't need these features, and they made Docker updates a source of instability.

What is CRI-O?

The CRI-O project started as a way to create a minimal, maintainable runtime dedicated to Kubernetes. It emerged from Red Hat engineers' work on a variety of container-related tools, such as skopeo, which is used for pulling images from a container registry, and containers/storage, which creates root filesystems for containers and supports different filesystem drivers. Red Hat has also been involved in container standardization as a maintainer in the Open Container Initiative (OCI).

CRI-O is a community-driven, open source project developed by maintainers and contributors from Red Hat, Intel, SUSE, Hyper, IBM, and others. Its name comes from CRI and OCI, as the goal of the project is to implement the Kubernetes CRI using standard OCI-based components.

CRI-O is an implementation of the Kubernetes CRI that allows Kubernetes to use any OCI-compliant runtime as the container runtime for running pods. It currently supports runc and Clear Containers, but in principle any OCI-conformant runtime can be plugged in. CRI-O supports OCI container images and can pull from any compliant container registry. It is a lightweight alternative to using Docker as the runtime for Kubernetes.

The scope of the project is tied to the CRI. Currently the only supported user of CRI-O is Kubernetes. Given this, the project maintainers strive to ensure that CRI-O always works with Kubernetes by providing a stringent and comprehensive test suite. These end-to-end tests are run on each pull request to ensure it doesn't break Kubernetes, and the tests are constantly evolving to keep pace with changes in Kubernetes.

Components

CRI-O is made up of several components that are found in different GitHub repositories.

OCI-compatible runtimes

CRI-O supports any OCI-compatible runtime, including runc and Clear Containers. These runtimes are tested using a library of OCI runtime tools that generates OCI configurations for them.

Storage

The containers/storage library is used for managing layers and creating root filesystems for the containers in a pod. The OverlayFS, device mapper, aufs, and Btrfs drivers are implemented, with OverlayFS as the default. Support for network-based filesystem images (e.g., NFS, Gluster, CephFS) is on the way.
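As an illustration of how an OverlayFS root filesystem is assembled from image layers, the Go sketch below builds the overlay mount option string. It is not the containers/storage code; the layer and directory paths are hypothetical. The one detail it encodes is that overlayfs lists `lowerdir` entries topmost first, so layers ordered base-first are reversed.

```go
package main

import (
	"fmt"
	"strings"
)

// overlayOptions builds the mount data string for an OverlayFS root filesystem.
// layers is ordered base-first; overlayfs expects lowerdir with the topmost
// layer first, so the slice is reversed. upper receives the container's writes,
// and work is the scratch directory overlayfs requires on the same filesystem.
func overlayOptions(layers []string, upper, work string) string {
	lower := make([]string, len(layers))
	for i, l := range layers {
		lower[len(layers)-1-i] = l
	}
	return fmt.Sprintf("lowerdir=%s,upperdir=%s,workdir=%s",
		strings.Join(lower, ":"), upper, work)
}

func main() {
	opts := overlayOptions(
		[]string{"/layers/base", "/layers/app"}, // hypothetical layer directories
		"/containers/ctr1/upper",
		"/containers/ctr1/work",
	)
	fmt.Println(opts)
	// On Linux, a driver could pass opts as the data argument of mount(2),
	// e.g. syscall.Mount("overlay", target, "overlay", 0, opts).
}
```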

Image

The containers/image library is used for pulling images from registries. It supports Docker image manifest version 2, schema 1 and schema 2, and passes all Docker and Kubernetes tests.
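To make the schema 1 versus schema 2 distinction concrete, the Go sketch below inspects a manifest's `schemaVersion` and `mediaType` fields to decide which format it is. This is a hypothetical helper, not the containers/image API, and the sample manifests are trimmed to the fields it reads.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Media type of a Docker image manifest, version 2, schema 2.
const dockerV2Schema2 = "application/vnd.docker.distribution.manifest.v2+json"

// manifestHeader holds just the fields needed to tell the schemas apart.
type manifestHeader struct {
	SchemaVersion int    `json:"schemaVersion"`
	MediaType     string `json:"mediaType"`
}

// manifestSchema reports which Docker v2 manifest schema raw uses. Anything
// else (manifest lists, OCI manifests, unknown versions) is treated as an error.
func manifestSchema(raw []byte) (int, error) {
	var h manifestHeader
	if err := json.Unmarshal(raw, &h); err != nil {
		return 0, err
	}
	switch {
	case h.SchemaVersion == 1:
		return 1, nil
	case h.SchemaVersion == 2 && h.MediaType == dockerV2Schema2:
		return 2, nil
	default:
		return 0, fmt.Errorf("unsupported manifest: schemaVersion=%d mediaType=%q",
			h.SchemaVersion, h.MediaType)
	}
}

func main() {
	samples := []string{
		`{"schemaVersion": 1, "name": "library/busybox", "tag": "latest"}`,
		`{"schemaVersion": 2, "mediaType": "` + dockerV2Schema2 + `"}`,
	}
	for _, s := range samples {
		schema, err := manifestSchema([]byte(s))
		fmt.Println(schema, err)
	}
}
```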

Networking

The Container Network Interface (CNI) sets up networking for the pods. Various CNI plugins, such as Flannel, Weave, and OpenShift-SDN, have been tested with CRI-O and work as expected.
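A CNI network is described by a JSON configuration; a network configuration list (`.conflist`) chains several plugins, each named by its `type`. The Go sketch below parses such a list and returns the plugin chain. The network name, subnet, and plugin settings in the sample are hypothetical, and plugin-specific fields are ignored by the parser.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// netConfList mirrors the top-level fields of a CNI network configuration list.
type netConfList struct {
	CNIVersion string `json:"cniVersion"`
	Name       string `json:"name"`
	Plugins    []struct {
		Type string `json:"type"`
	} `json:"plugins"`
}

// pluginChain returns the network name and the plugin types, in the order
// a CNI runtime would invoke them when adding a pod to the network.
func pluginChain(raw []byte) (string, []string, error) {
	var conf netConfList
	if err := json.Unmarshal(raw, &conf); err != nil {
		return "", nil, err
	}
	types := make([]string, 0, len(conf.Plugins))
	for _, p := range conf.Plugins {
		types = append(types, p.Type)
	}
	return conf.Name, types, nil
}

func main() {
	// Hypothetical configuration, for illustration only.
	raw := []byte(`{
		"cniVersion": "0.3.1",
		"name": "example-pod-net",
		"plugins": [
			{"type": "bridge", "bridge": "cni0", "ipam": {"type": "host-local", "subnet": "10.88.0.0/16"}},
			{"type": "portmap", "capabilities": {"portMappings": true}}
		]
	}`)
	name, chain, err := pluginChain(raw)
	fmt.Println(name, chain, err)
}
```

Because the runtime only reads the configuration and calls the named plugins, swapping Flannel for Weave is a configuration change rather than a CRI-O change.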

Monitoring

CRI-O's conmon utility monitors the containers, handles logging from the container process, serves attached clients, and detects out-of-memory (OOM) situations.

Security

Container security separation is provided by a series of mechanisms, including SELinux, Linux capabilities, seccomp, and the other security separation policies described in the OCI specification.

Pod architecture

CRI-O offers the following setup:


The architectural components are broken down as follows:

- Pods live in a cgroup slice and share IPC, network, and PID namespaces.

- The root filesystem for a container is generated by the containers/storage library when the CRI CreateContainer or RunPodSandbox API is called.

- Each container has a monitoring process (conmon) that receives the master pseudo-terminal (pty), copies data between master/slave pty pairs, handles logging for the container, and records the exit code for the container process.

- The CRI Image API is implemented using the containers/image library.

- Networking for the pod is set up through CNI, so any CNI plugin can be used with CRI-O.
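The namespace sharing in the first item can be expressed in OCI terms: each entry in a container's `linux.namespaces` list either creates a new namespace (no path) or joins an existing one (a path). The Go sketch below builds that list for a container that joins its pod's IPC, network, and PID namespaces through a hypothetical pod-level process (`infraPID`) while getting a private mount namespace. This is a simplified illustration; CRI-O's actual mechanism for holding pod namespaces may differ.

```go
package main

import "fmt"

// namespace mirrors an entry of the OCI config.json "linux.namespaces" list.
// An empty Path asks the runtime to create a new namespace; a non-empty Path
// joins an existing one.
type namespace struct {
	Type string `json:"type"`
	Path string `json:"path,omitempty"`
}

// containerNamespaces returns the namespace list for a container that shares
// its pod's IPC, network, and PID namespaces, held open by a hypothetical pod
// process infraPID, while keeping its own mount namespace.
func containerNamespaces(infraPID int) []namespace {
	return []namespace{
		{Type: "ipc", Path: fmt.Sprintf("/proc/%d/ns/ipc", infraPID)},
		{Type: "network", Path: fmt.Sprintf("/proc/%d/ns/net", infraPID)},
		{Type: "pid", Path: fmt.Sprintf("/proc/%d/ns/pid", infraPID)},
		{Type: "mount"}, // new, private mount namespace for this container
	}
}

func main() {
	for _, ns := range containerNamespaces(1234) {
		fmt.Printf("%+v\n", ns)
	}
}
```

Every container in the pod gets the same paths for the shared entries, which is what makes localhost networking and cross-container process visibility work inside a pod.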

Status

CRI-O versions 1.0.0 and 1.8.0 have been released; 1.0.0 works with Kubernetes 1.7.x. Releases after 1.0 are version-matched with Kubernetes minor releases, so it is easy to tell that CRI-O 1.8.x supports Kubernetes 1.8.x, 1.9.x will support Kubernetes 1.9.x, and so on.
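The version-matching rule in the paragraph above can be written as a small check. This is a hypothetical helper, not part of CRI-O or Kubernetes; it assumes plain `major.minor.patch` version strings (an optional leading `v` is tolerated) and encodes only the two cases described: 1.0.x pairs with Kubernetes 1.7.x, and later releases match on major.minor.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// majorMinor extracts the major and minor numbers from a version like "1.8.2".
func majorMinor(v string) (int, int, error) {
	parts := strings.SplitN(strings.TrimPrefix(v, "v"), ".", 3)
	if len(parts) < 2 {
		return 0, 0, fmt.Errorf("bad version %q", v)
	}
	major, err := strconv.Atoi(parts[0])
	if err != nil {
		return 0, 0, err
	}
	minor, err := strconv.Atoi(parts[1])
	if err != nil {
		return 0, 0, err
	}
	return major, minor, nil
}

// compatible reports whether a CRI-O version pairs with a Kubernetes version.
func compatible(crio, kube string) (bool, error) {
	cMaj, cMin, err := majorMinor(crio)
	if err != nil {
		return false, err
	}
	kMaj, kMin, err := majorMinor(kube)
	if err != nil {
		return false, err
	}
	if cMaj == 1 && cMin == 0 {
		// Special case: CRI-O 1.0.x works with Kubernetes 1.7.x.
		return kMaj == 1 && kMin == 7, nil
	}
	// After 1.0, CRI-O major.minor matches Kubernetes major.minor.
	return cMaj == kMaj && cMin == kMin, nil
}

func main() {
	for _, pair := range [][2]string{{"1.0.0", "1.7.5"}, {"1.8.2", "1.8.0"}, {"1.8.2", "1.9.1"}} {
		ok, err := compatible(pair[0], pair[1])
		fmt.Printf("CRI-O %s with Kubernetes %s: %v (err=%v)\n", pair[0], pair[1], ok, err)
	}
}
```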

Try it yourself

- Minikube supports CRI-O.

- It is easy to set up a Kubernetes local cluster using instructions in the CRI-O README.

- CRI-O can be set up using kubeadm; try it using this playbook.

How can you contribute?

CRI-O is developed at GitHub, where there are many ways to contribute to the project.

- Look at the issues and make pull requests to contribute fixes and features.

- Testing and opening issues for any bugs would be very helpful, for example by following the README and testing various Kubernetes features using CRI-O as the runtime.

- The Kubernetes Tutorials are a good starting point for testing various Kubernetes features.

- The project is introducing a command-line interface that lets users experiment with and debug CRI-O's back end, and it needs lots of help building it out. Anyone who wants to do some Go programming is welcome to take a stab.

- Help with packaging and documentation is always needed.

Communication happens at #cri-o on IRC (freenode) and on GitHub issues and pull requests. We hope to see you there.

Learn more in Mrunal Patel's talk, CRI-O: All the Runtime Kubernetes Needs, and Nothing More, at KubeCon + CloudNativeCon, which will be held December 6-8 in Austin, Texas.
