Designing for virtual reality (VR) involves a vast number of considerations, some shared with traditional human-computer interfaces and some unique to spatial environments. One aspect of user interaction particularly interests me as a developer: How should VR designers enable users to move within a virtual environment? VR is a spatial computer interface, and interacting with a spatial world means moving within it, which brings unique design challenges and opportunities.

Since the inception of head-mounted VR in the 1960s, virtual reality sickness (part of the broader category known as simulator sickness) has been a problem for VR designers. Multiple factors contribute to the discomfort and nausea associated with VR sickness. Understanding how our brains perceive and interpret movement in the physical world can help VR designers avoid causing discomfort in the virtual world, and leveraging all the technical and non-technical tools at our disposal can help us create better user experiences.

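Motion-induced VR sickness arises from a conflict between the vestibular and visual systems. Although the feeling is similar to car sickness or seasickness, the underlying conflict runs in the opposite direction: in a car or on a boat, the inner ear senses motion that the eyes do not see, while in VR the eyes see motion that the inner ear does not feel. To understand why, let's cover a few quick points about how the human brain senses motion.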

Understanding the vestibular system

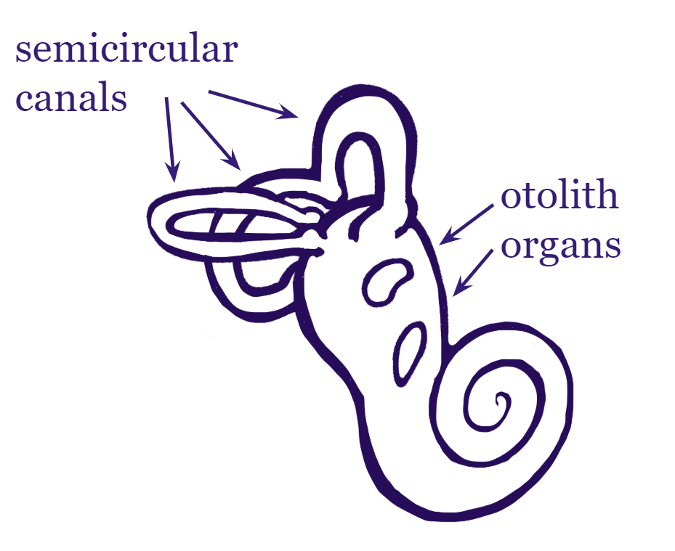

The human vestibular system is located in the inner ear and consists of two main sets of components: three semicircular canals, which measure angular acceleration around three axes, and two otolith organs, which work together to measure linear acceleration in three dimensions.

The "hardware" in the inner ear is similar to the hardware components that enable motion tracking in VR headsets, which are either 3DoF (three degrees of freedom: rotational tracking only) or 6DoF (six degrees of freedom: rotational and positional tracking).
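To make the 3DoF/6DoF distinction concrete, here is a minimal sketch in Python. The `Pose` type and `apply_head_motion` function are hypothetical illustrations, not part of any VR SDK. A 3DoF pose carries only orientation, so a physical lean or step is simply dropped; a 6DoF pose also tracks position. For simplicity, the sketch stores orientation as yaw/pitch/roll angles and adds them component-wise; real tracking APIs such as OpenXR represent orientation as a quaternion instead.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Pose:
    # Rotation as (yaw, pitch, roll) in degrees: the rotational tracking
    # that both 3DoF and 6DoF headsets provide.
    orientation: Vec3
    # Position in meters; None for a 3DoF device that cannot track translation.
    position: Optional[Vec3] = None

    @property
    def degrees_of_freedom(self) -> int:
        return 3 if self.position is None else 6


def apply_head_motion(pose: Pose, rotation_delta: Vec3, translation_delta: Vec3) -> Pose:
    """Apply a physical head movement to a tracked pose.

    A 3DoF pose picks up only the rotation and discards the translation,
    which is why leaning forward in a 3DoF headset does not move the view.
    """
    new_orientation = tuple(o + d for o, d in zip(pose.orientation, rotation_delta))
    if pose.position is None:
        return Pose(new_orientation)
    new_position = tuple(p + d for p, d in zip(pose.position, translation_delta))
    return Pose(new_orientation, new_position)
```

For example, turning the head 10 degrees while leaning 0.5 m forward changes only the orientation of a 3DoF pose, but changes both orientation and position of a 6DoF pose.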

In addition to sensing motion with our vestibular system, we also receive motion cues from our visual field. As children, we begin by relying primarily on our visual system for motion cues. As we mature, we learn to calibrate our vestibular system to match visual stimuli. The sense of motion due to movement within the visual field is called "vection." The paradigmatic example of vection is the feeling you have when you are sitting on a stationary train and you mistake the movement of an adjacent train for your own train moving in the opposite direction.

A conflict between the perception of motion provided by our visual and vestibular systems (i.e., when one system tells us we are moving in a certain way and the other system tells us something different) can lead to discomfort and nausea. If we are below deck in a boat on rough seas or reading a book in the back seat of a car, our vestibular system senses movement without the corresponding visual stimuli, and we start to feel sick. We are told to look out at the horizon because it re-establishes agreement between our visual and vestibular inputs.

Why does this matter for VR design?

When a VR user undergoes artificial acceleration in a virtual world, they experience the visual sense of motion (vection) without the corresponding vestibular stimuli. This can lead to the discomfort and nausea of virtual reality sickness, much like the conflicts described above but with the roles of the two senses reversed. Because of this risk, some developers have steered away from movement schemes that involve perceived acceleration and toward locomotion solutions, such as teleportation, that involve no artificial acceleration.

And yet, in the two-and-a-half years since the release of commercial VR headsets, a growing number of popular VR applications have implemented forms of artificial motion that do involve some acceleration. Applications such as the competitive VR shooter Onward and the exploratory application Google Earth VR allow users to move by pointing their controllers in the direction they wish to travel. VR games such as The Climb and Lone Echo let users move by grabbing onto their environment and pulling themselves through it. The competitive racing game Sprint Vector lets users move at high speed through twisting environments using a combination of arm-swinging, grabbing, and hand-directed gliding. Similarly, the upcoming VR titles Stormland and Population: One allow users to combine controller-directed movement, climbing, and flying to move throughout their environments.
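Controller-directed ("point to move") locomotion of the kind described above can be sketched in a few lines. This is a hypothetical illustration, not taken from any of the applications named above: each frame, while a button is held, the player's rig moves along the controller's forward direction, flattened onto the ground plane, at a fixed speed. The function name and parameters are assumptions, and the sketch treats y as "up." Because the concern here is artificial acceleration, the sketch moves at a constant speed rather than easing in and out, so velocity changes only when movement starts or stops.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def point_to_move_step(
    rig_position: Vec3,
    controller_forward: Vec3,
    button_held: bool,
    speed_m_per_s: float,
    dt_s: float,
) -> Vec3:
    """Advance the player rig one frame in the direction the controller points.

    Hypothetical helper: y is "up", and the controller's forward vector is
    projected onto the ground (x-z) plane so pointing upward does not lift
    the user off the floor.
    """
    if not button_held:
        return rig_position
    fx, _, fz = controller_forward
    length = math.hypot(fx, fz)
    if length == 0.0:
        # Controller points straight up or down: no horizontal direction to travel.
        return rig_position
    step = speed_m_per_s * dt_s  # constant speed: distance covered this frame
    x, y, z = rig_position
    return (x + fx / length * step, y, z + fz / length * step)
```

Called once per frame with the frame time as `dt_s`, this moves the user exactly where their arm points, which ties the visual motion to a deliberate physical gesture.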

So, what's happening?

One clue to why these artificial locomotion techniques have been working may lie in how we solve more traditional forms of vection/vestibular conflict. While discussing my experiences with VR locomotion with a PhD audiologist (who also happens to be my mother), she mentioned a tool her patients reported using to manage their own instability resulting from vestibular issues: high-top sneakers.

Why would high-top sneakers help?

In addition to the vestibular and visual systems, our brains also process what is sometimes called somesthetic perception. This includes both the sensation of touch and proprioception (the brain's interpretation of stimuli from the joints and muscles to determine the position and relative movement of one's body in space). In the case of vestibular disorders, the additional somesthetic feedback from the feeling of the high-top sneakers on the patient's ankles could help increase stability despite their unreliable vestibular inputs. This could also be the source of the palliative effect of keeping one or both feet on the ground while lying in bed when suffering from alcohol-induced vestibular instability (the "spins").

Similarly, in the case of VR, artificial locomotion techniques that require users to physically move their bodies to direct artificial movement within the virtual world could help their brains overcome vestibular conflict. When they use their arm to point towards the direction of travel or grab onto virtual objects to pull themselves through a virtual environment, they send somesthetic signals to their brain that match the visual stimuli they are receiving despite insufficient stimuli coming from the vestibular system.
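The grab-and-pull case can be made concrete with a minimal sketch. It assumes a hypothetical per-frame update in which hand positions are reported in tracking space (relative to the player's rig), and the `GrabLocomotion` class is an illustration rather than any game's or SDK's implementation. While the user grips a virtual handhold, the rig moves by the opposite of the hand's tracked movement, so the hand stays fixed on the handhold and the world moves in step with the arm's pull. Every bit of visual motion is then produced by the user's own muscle movement, which is the somesthetic match described above.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


class GrabLocomotion:
    """Hypothetical climbing-style locomotion.

    Hand positions are in tracking space (relative to the player rig), so
    moving the rig by the opposite of the hand's motion keeps the gripping
    hand fixed in the virtual world while the body is pulled along.
    """

    def __init__(self) -> None:
        # Hand position on the previous gripping frame; None when not gripping.
        self._last_hand: Optional[Vec3] = None

    def update(self, rig_position: Vec3, hand_local: Vec3, gripping: bool) -> Vec3:
        if not gripping:
            # Releasing the grip stops all artificial motion immediately.
            self._last_hand = None
            return rig_position
        if self._last_hand is None:
            # First frame of the grab: remember where the hand is, don't move yet.
            self._last_hand = hand_local
            return rig_position
        delta = tuple(h - p for h, p in zip(hand_local, self._last_hand))
        self._last_hand = hand_local
        # Pulling the hand back toward the body moves the rig the opposite way,
        # toward the handhold.
        return tuple(r - d for r, d in zip(rig_position, delta))
```

For example, gripping with the hand 0.5 m in front of the body and pulling it back to 0.2 m moves the rig 0.3 m toward the handhold; letting go stops the motion.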

There is also reason to think that novel forms of locomotion are less likely to induce nausea than familiar ones, like walking, for which we already have a specific expectation of how they should feel. With new and unfamiliar forms of locomotion, the brain may defer to input from the visual system because it has no existing vestibular model for how that type of locomotion should feel. Thus, the more novel the type of locomotion (e.g., flying like Superman versus walking up a flight of stairs), the more comfortable it might be for users.

The takeaway

When implementing locomotion in VR applications, designers should be mindful of the ways artificial movement can create vestibular conflict and the effect this can have on users. Designers should also take this challenge as an opportunity to be creative and experiment with locomotion schemes. If users report nausea with a form of locomotion, designers can leverage the user's somesthetic cues as input or consider ways of moving through space that are new or unfamiliar. Whatever the type of locomotion, sensitivity varies widely among users, so designers should test with a diverse range of users and include user-definable comfort options to accommodate people of all sensitivities.

Mike Harris will present Learning the Rules to Break Them: Designing for the Future of VR at All Things Open, October 21-23 in Raleigh, North Carolina.
