Machine learning has transformed the computing landscape, giving technology entirely new scenarios to address and making existing scenarios far more efficient. However, to have a highly efficient machine learning solution, an enterprise must embrace the following three concepts: composability, portability, and scalability.

3 challenges in machine learning

Composability

When people hear of machine learning, they often jump first to building models. A number of popular frameworks make this process much easier, such as TensorFlow, PyTorch, scikit-learn, XGBoost, and Caffe. Each of these frameworks is designed to make data scientists' jobs easier as they explore their problem space.

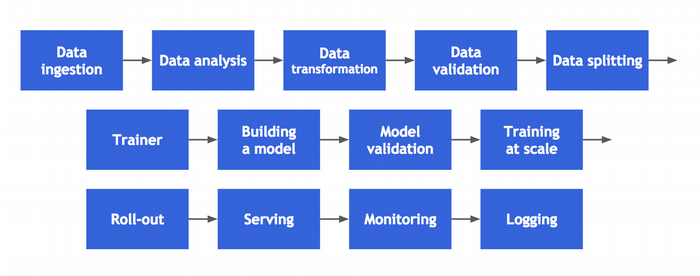

However, in the reality of building an actual production-grade solution, there are many more complex steps. These include importing, transforming, and visualizing the data; building and validating the model; training the model at scale; and deploying the model to production. Focusing only on model training misses the majority of the day-to-day job of a data scientist.

Portability

To quote Joe Beda, "Every difference between dev/staging/prod will eventually result in an outage."

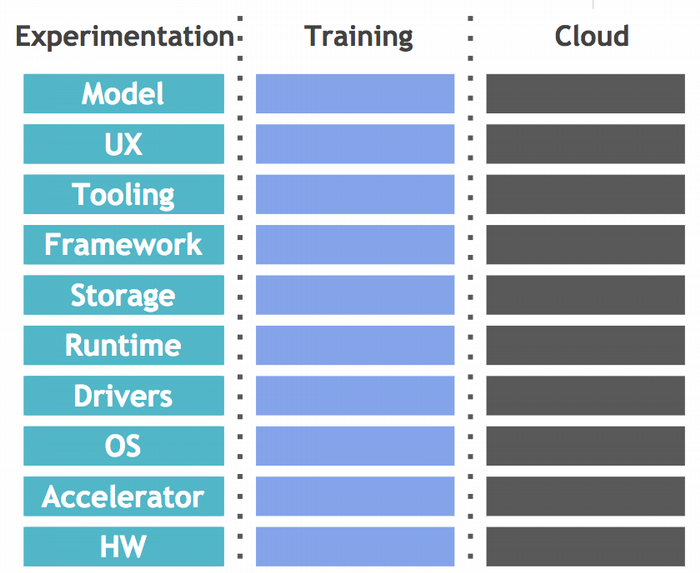

The different steps of machine learning often belong to entirely different systems. To make things even more complicated, lower-level components, such as hardware, accelerators, and operating systems, are also a consideration, which adds to the variation. Without automated systems and tooling, this variation can quickly become overwhelming and challenging to manage. It also makes it very difficult to get consistent results from repeated experiments.
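One low-tech way to make the variation described above visible is to record a few facts about each environment and compare them. The sketch below is only an illustration under that assumption and is not part of Kubeflow; the function names (`describe_env`, `fingerprint`, `env_drift`) and the particular facts recorded are hypothetical choices.

```shell
#!/bin/sh
# Print one "key=value" line per environment fact we want to compare.
# ASSUMPTION: OS, kernel, and CPU architecture are the facts of interest;
# a real setup would likely add accelerator and library versions.
describe_env() {
  printf 'os=%s\n' "$(uname -s)"
  printf 'kernel=%s\n' "$(uname -r)"
  printf 'arch=%s\n' "$(uname -m)"
}

# Reduce the description to a short checksum that is easy to compare
# across machines. cksum is specified by POSIX.
fingerprint() {
  describe_env | cksum | cut -d ' ' -f 1
}

# Compare two saved descriptions and print only the lines that differ.
# diff exits non-zero when the files differ, so callers can test for drift.
env_drift() {
  diff "$1" "$2"
}
```

For example, saving `describe_env > dev.txt` on one machine and `describe_env > prod.txt` on another, then running `env_drift dev.txt prod.txt`, lists the facts that differ between the two.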

Scalability

Deep learning, one of the biggest recent breakthroughs in machine learning, is a result of the larger scale and capacity available in the cloud. This includes a variety of machine types and hardware-specific accelerators (e.g., graphics processing units and Tensor Processing Units), as well as data locality for improved performance. Furthermore, scalability is not just about hardware and software; it is also important to scale teams through collaboration and to simplify running a large number of experiments.

Kubernetes and machine learning

Kubernetes has quickly become the solution for deploying complicated workloads anywhere. While it started with simple stateless services, customers have begun to move complex workloads to the platform, taking advantage of the rich APIs, reliability, and performance provided by Kubernetes. The machine learning community is starting to utilize these core benefits; unfortunately, creating these deployments is still complicated and requires mixing vendor and hand-rolled solutions. Connecting and managing these services for even moderately sophisticated setups introduces huge barriers of complexity for data scientists who are just looking to explore a model.

Introducing Kubeflow

To address these challenges, the Kubeflow project was created at the end of 2017. Kubeflow's mission is to make it easy for everyone to develop, deploy, and manage composable, portable, and scalable machine learning on Kubernetes everywhere.

Kubeflow resides in an open source GitHub repository dedicated to making machine learning stacks on Kubernetes easy, fast, and extensible. This repository contains:

- JupyterHub for collaborative & interactive training

- A TensorFlow training custom resource

- A TensorFlow serving deployment

- Argo for workflows

- SeldonCore for complex inference and non-TensorFlow Python models

- Reverse proxy (Ambassador)

- Wiring to make it work on any Kubernetes anywhere

Because this solution relies on Kubernetes, it runs wherever Kubernetes runs. Just spin up a cluster and go!

Let's say you are running Kubernetes with OpenShift. Here is how you can start using Kubeflow. (You can also review the Kubeflow user guide.)

# Get ksonnet from https://ksonnet.io/#get-started

# Get oc from https://www.openshift.org/download.html

# Create a namespace for kubeflow deployment

oc new-project mykubeflow

# Initialize a ksonnet app, set environment default namespace

# For different kubernetes api versions see 'oc version'

ks init my-kubeflow --api-spec=version:v1.9.0

cd my-kubeflow

ks env set default --namespace mykubeflow

# Install Kubeflow components; for a list of versions, see https://github.com/kubeflow/kubeflow/releases

ks registry add kubeflow github.com/kubeflow/kubeflow/tree/v0.1.0/kubeflow

ks pkg install kubeflow/core@v0.1.0

ks pkg install kubeflow/tf-serving@v0.1.0

ks pkg install kubeflow/tf-job@v0.1.0

# Create templates for core components

ks generate kubeflow-core kubeflow-core

# Relax OpenShift security

oc login -u system:admin

oc adm policy add-scc-to-user anyuid -z ambassador -n mykubeflow

oc adm policy add-scc-to-user anyuid -z jupyter-hub -n mykubeflow

oc adm policy add-role-to-user cluster-admin -z tf-job-operator -n mykubeflow

# Deploy Kubeflow

ks apply default -c kubeflow-core

To connect to JupyterHub locally, simply forward to a local port and connect to https://127.0.0.1:8000.
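The port forward mentioned above could look something like the sketch below. It uses the `mykubeflow` namespace from the earlier steps. Two things are assumptions that this article does not confirm: that the JupyterHub pod carries the label `app=tf-hub`, and that JupyterHub listens on port 8000 inside the pod. Check `oc get pods --show-labels` on your cluster and adjust if they differ. The function names `find_hub_pod` and `forward_hub` are made up for illustration.

```shell
#!/bin/sh
# Namespace created earlier with "oc new-project mykubeflow".
NAMESPACE="mykubeflow"
# Local port to listen on; 8000 matches the address given above.
LOCAL_PORT="8000"
# ASSUMPTION: label selector for the JupyterHub pod. Verify on your cluster.
HUB_SELECTOR="app=tf-hub"

# Print the name of the first pod matching the selector.
find_hub_pod() {
  oc get pods -n "$NAMESPACE" -l "$HUB_SELECTOR" \
    -o jsonpath='{.items[0].metadata.name}'
}

# Forward LOCAL_PORT on this machine to port 8000 in the pod.
# ASSUMPTION: JupyterHub listens on port 8000 inside the pod.
# Runs in the foreground until interrupted with Ctrl-C.
forward_hub() {
  pod="$(find_hub_pod)"
  if [ -z "$pod" ]; then
    echo "no pod found for selector $HUB_SELECTOR in $NAMESPACE" >&2
    return 1
  fi
  oc port-forward -n "$NAMESPACE" "$pod" "${LOCAL_PORT}:8000"
}
```

After loading these definitions into your shell, running `forward_hub` keeps the tunnel open while you use the notebook server in your browser.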

To create a TensorFlow training job:

# Create a component

ks generate tf-job myjob --name=myjob

# Parameters can be set using ks param e.g. to set the Docker image used

ks param set myjob image gcr.io/tf-on-k8s-dogfood/tf_sample:d4ef871-dirty-991dde4

# To run your job

ks apply default -c myjob

To serve a TensorFlow model:

# Create a component for your model

ks generate tf-serving serveInception --name=serveInception

ks param set serveInception modelPath gs://kubeflow-models/inception

# Deploy the model component

ks apply default -c serveInception

What's next?

Kubeflow is in the midst of building out a community effort and would love your help! We have already been collaborating with many teams, including CaiCloud, Red Hat & OpenShift, Canonical, Weaveworks, Container Solutions, Cisco, Intel, Alibaba, Uber, and many others. Reza Shafii, senior director, Red Hat, explains how his company is already seeing Kubeflow's promise:

"The Kubeflow project was a needed advancement to make it significantly easier to set up and productionize machine learning workloads on Kubernetes, and we anticipate that it will greatly expand the opportunity for even more enterprises to embrace the platform. We look forward to working with the project members."

If you would like to try out Kubeflow right now in your browser, we've partnered with Katacoda to make it super easy.

You can also learn more about Kubeflow from this video.

And we're just getting started! We would love for you to help.

- Join the Slack channel

- Join the kubeflow-discuss email list

- Subscribe to the Kubeflow Twitter account

- Download and run Kubeflow, and submit bugs
