If you've been following the artificial intelligence (AI) news media lately, you probably heard that one of Google's top AI people, Ian Goodfellow, moved to Apple in March. Goodfellow was named one of MIT's Innovators Under 35 in 2017 and one of Foreign Policy's 100 Global Thinkers in 2019. He is also known as the father of the machine learning concept called the generative adversarial network (GAN). According to Yann LeCun, the director of Facebook AI, GAN is the "most interesting idea in the last 10 years of machine learning."

Nearly everyone is aware of how hot the field of machine learning is now. Google is doing machine learning. Amazon is doing machine learning. Facebook is doing machine learning. Every company on the planet wants to do something with machine learning. Mark Cuban, an American businessman, investor (including on the Shark Tank reality TV show), and owner of the Dallas Mavericks NBA team, recently said everyone should learn machine learning.

If machine learning is something we should learn and GAN is the hottest idea in machine learning, it stands to reason that it's a good idea to learn more about GAN.

There are plenty of interesting articles, videos, and other learning materials available on the internet that explain GAN. This article is a very introductory guide to GAN and some of the open source projects and resources where you can expand your knowledge about it and machine learning in general.

If you want to jump in quickly, I highly recommend beginning with Stanford University's lecture on Generative Models on YouTube. Also, Ian Goodfellow's original paper, "Generative Adversarial Networks," is downloadable as a PDF. Otherwise, continue reading for some of the background information and other tools and resources that should help you.

Statistical classification models

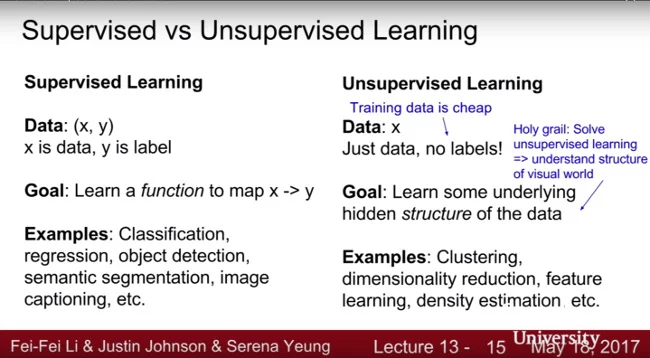

Before learning about GAN, it is important to know the differences between the models of statistical classification that are widely used in machine learning and statistics. In statistical classification, which is an area of study seeking to identify where a new observation belongs among a set of categories, there are two main models: the discriminative model and the generative model. The discriminative model belongs to supervised learning and the generative model belongs to unsupervised learning. This slide from Stanford's lecture explains the difference:

Discriminative models

The discriminative model tries to identify which category a new item belongs to based on the features found in that item. For example, let's say we want to create a very simple discriminative model that decides whether a given piece of dialogue comes from a particular movie, in this case The Avengers. Here is a line of sample dialogue:

"He's part of the Avengers, and the group will do everything in its power to keep the world safe. Loki better watch out."

Our pre-defined Avengers keyword group could include "Avengers" and "Loki." Our discriminative model algorithm will try to match those keywords against the given text and report a probability between 0.0 and 1.0, where a number closer to 1 indicates that the dialogue is most likely from The Avengers and a number closer to 0 indicates that it is not.

This was an extremely simple example of a discriminative model, but you probably get the idea. You can see that the discriminative model depends heavily on the quality of the pre-defined categories but makes only a few assumptions.
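The keyword matching described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the keyword set and the `avengers_score` function are hypothetical names, and the value returned is a simple match ratio rather than a calibrated probability. A real discriminative model would learn its decision rule from labeled examples.

```python
import re

# Hypothetical keyword group for the "Avengers" category (an assumption).
AVENGERS_KEYWORDS = {"avengers", "loki"}

def avengers_score(dialogue, keywords=AVENGERS_KEYWORDS):
    """Return the fraction of keywords found in the text, from 0.0 to 1.0."""
    if not keywords:
        return 0.0
    # Lowercase the text and split it into a set of words.
    words = set(re.findall(r"[a-z']+", dialogue.lower()))
    hits = sum(1 for keyword in keywords if keyword in words)
    return hits / len(keywords)

line = ("He's part of the Avengers, and the group will do everything in its "
        "power to keep the world safe. Loki better watch out.")

print(avengers_score(line))                                      # 1.0
print(avengers_score("Frankly, my dear, I don't give a damn."))  # 0.0
```

The sample line contains both keywords, so it scores 1.0. A line with neither keyword scores 0.0.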

Generative models

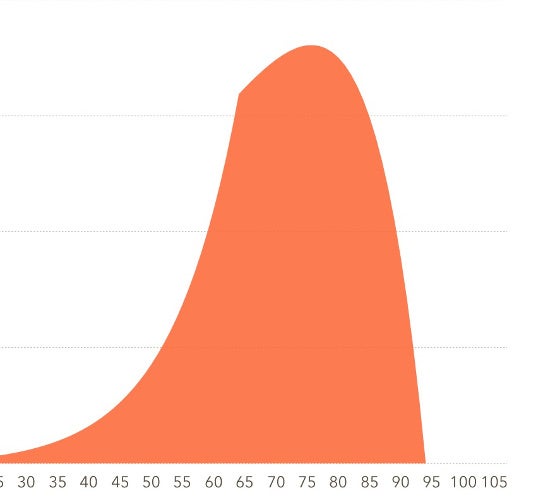

The generative model, instead of labeling the data, works by trying to draw some insight from a certain data set. If you remember the Gaussian distribution you probably learned in high school, you might get the idea right away.

For example, let's say this is the list of final exam scores in a class of 100 students.

- 10 students scored above 80%

- 80 students scored between 70% and 80%

- 10 students scored below 70%

From this data, we can infer that most students scored in the 70s. This insight is based on the statistical distribution of a certain data set. If we see other patterns, we can also gather that data and make other inferences.
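As a rough sketch of this idea, the snippet below fits a Gaussian to a set of exam scores and then draws new scores from it. The individual scores are made-up values chosen only to be consistent with the three groups above. The example uses `NormalDist` from Python's standard `statistics` module (Python 3.8 or later).

```python
from statistics import NormalDist

# Hypothetical raw scores consistent with the groups above (assumed values).
scores = [85] * 10 + [75] * 80 + [65] * 10

# "Learn" the distribution: estimate the mean and standard deviation.
dist = NormalDist.from_samples(scores)
print(dist.mean, round(dist.stdev, 2))  # 75.0 4.49

# "Generate": draw new, plausible-looking scores from the fitted model.
new_scores = dist.samples(5, seed=42)
print([round(score, 1) for score in new_scores])
```

The fitted model no longer needs the original list. It summarizes the data as a distribution and can produce new values that follow the same pattern.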

Generative models you may have heard of include the Gaussian mixture model, Bayesian models, the hidden Markov model, and the variational autoencoder (VAE). GAN also falls into the generative model category but with a twist.

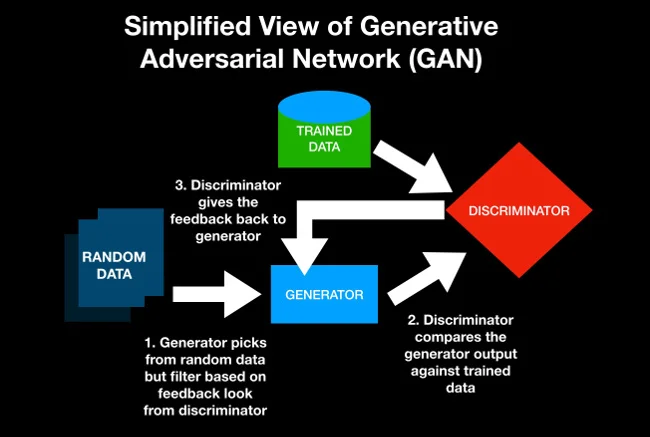

GAN's model

GAN attempts to combine the discriminatory model and the generative model by randomly generating the data through the generative model, then letting the discriminative model evaluate the data and use the result to improve the next output.

Here is a simplified view of GAN:

GAN's real implementation is much more complicated than this, but this is the general idea.

The easiest way to understand GAN is to picture a detective and a counterfeiter playing a repeated guessing game: the counterfeiter tries to forge a $100 bill, and the detective judges whether each attempt is real or fake. Here is a hypothetical dialogue of this scenario:

Counterfeiter: "Does this $100 look like real money?"

Detective: "No! That does not look like a real $100 bill at all."

Counterfeiter [holding a new design]: "Does this $100 bill look like real money?"

Detective: "That is better, but it still doesn't quite look real."

Counterfeiter [holding a variation of the design]: "What about now?"

Detective: "That is very close, but it still doesn't look real."

Counterfeiter [holding yet another variation]: "This should look real, right?"

Detective: "Yes! That is very close!"

OK. So, the detective is actually a partner-in-crime. In this example, the counterfeiter is the generator while the detective is the discriminator. As the game is repeated over and over, the forged currency tends to look more and more like the real currency.

The primary difference between the counterfeiter–detective example and GAN is that the main purpose of GAN is not to create a 100% real output where there can be one and only one answer. Instead, you can think of GAN as some sort of super-creative engine that can generate many unexpected outputs that can surprise you but also satisfy your curiosity and expectations.
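To make the generator and discriminator roles concrete, here is a deliberately tiny sketch in plain Python. It is not Goodfellow's code and not how real GANs are built. It assumes one-dimensional "data" drawn from a Gaussian, a linear generator `G(z) = a*z + b`, and a logistic discriminator `D(x) = sigmoid(w*x + c)`. All names and settings (`train_toy_gan`, the learning rate, the real mean of 4.0) are hypothetical. Each round, the discriminator takes one gradient step to tell real from fake, then the generator takes one step to make its output look more real to the discriminator.

```python
import math
import random

def sigmoid(t):
    """Numerically safe logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

def train_toy_gan(steps=2000, batch=32, lr=0.05,
                  real_mean=4.0, real_std=0.5, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0   # generator ("counterfeiter"): G(z) = a*z + b
    w, c = 0.0, 0.0   # discriminator ("detective"): D(x) = sigmoid(w*x + c)
    for _ in range(steps):
        real = [rng.gauss(real_mean, real_std) for _ in range(batch)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * z + b for z in noise]

        # Discriminator step: gradient ascent on
        # log D(real) + log(1 - D(fake)).
        grad_w = grad_c = 0.0
        for x in real:
            d = sigmoid(w * x + c)
            grad_w += (1.0 - d) * x
            grad_c += (1.0 - d)
        for x in fake:
            d = sigmoid(w * x + c)
            grad_w -= d * x
            grad_c -= d
        w += lr * grad_w / batch
        c += lr * grad_c / batch

        # Generator step: gradient ascent on log D(G(z)),
        # that is, try to make the detective say "real".
        grad_a = grad_b = 0.0
        for z in noise:
            d = sigmoid(w * (a * z + b) + c)
            grad_a += (1.0 - d) * w * z
            grad_b += (1.0 - d) * w
        a += lr * grad_a / batch
        b += lr * grad_b / batch
    return (a, b), (w, c)

generator, discriminator = train_toy_gan()
print("generator (a, b):", generator)
print("discriminator (w, c):", discriminator)
```

After the first round the discriminator has already learned that larger values look more real, and the generator's offset `b` starts moving in that direction. With a setup this simple, later rounds may oscillate around the real mean rather than settle on it, which hints at why training real GANs takes considerable care.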

Machine learning

Before you start using GAN, you need some background knowledge on machine learning and the mathematics behind it.

If you are new to machine learning, you can access Goodfellow's Deep Learning textbook for free or buy a hard copy through the Amazon link from his website if you prefer.

You can also watch Stanford's lectures on Convolutional Neural Networks for Visual Recognition on YouTube.

However, the best way to start working with machine learning is probably by taking free courses through Massive Open Online Courses (MOOCs), such as:

- Machine Learning by Stanford on Coursera

- Introduction to TensorFlow for Artificial Intelligence, Machine Learning, and Deep Learning by Deeplearning.ai on Coursera

- Machine Learning by Columbia University on EdX

- Machine Learning with Python: from Linear Models to Deep Learning by MIT on EdX

- Machine Learning Crash Course by Google

- Introduction to Machine Learning Problem Framing by Google

- Data Preparation and Feature Engineering in ML by Google

Since data science complements machine learning, learn about Kaggle, the most famous online community of data scientists and machine learners.

And you will also want to become familiar with the most popular machine learning tools:

Also, be sure to check out other Opensource.com articles about machine learning:

- An introduction to machine learning today

- Using machine learning to color cartoons

- Using machine learning to name colors

- Top 3 machine learning libraries for Python

- Machine learning with Python: Essential hacks and tricks

Get started with GAN

Now that we understand some basics about machine learning and GAN, you may be ready to start using GAN. First, access Goodfellow's original GAN code on GitHub under the BSD 3-Clause "New" or "Revised" License.

There are also several variations of GAN:

- Torch implementation

- Deep convolutional generative adversarial networks (DCGAN)

- Cycle-consistent adversarial networks (CycleGAN)

Ways you can use GAN

There are limitless possible use cases for GAN, but here are some ways people have experimented with it:

- Font generation: zi2zi

- Anime character generation: animeGAN

- Interactive image generation: iGAN

- Text-to-Image: TAC-GAN and "Generative Adversarial Text to Image Synthesis"

- 3D object generation: pix2vox

- Image editing: IcGAN

- Video generation: Adversarial Video Generation and "Unsupervised Learning of Visual Structure using Predictive Generative Networks"

- Music generation: "MidiNet: A Convolutional Generative Adversarial Network for Symbolic-domain Music Generation"

Have you used GAN? Do you have any other resources to share? If so, please leave a note in the comments.
