You've made the switch to Linux containers. Now you're trying to figure out how to run containers in production, and you're facing a few issues that were not present during development. You need something more than a few well-prepared Dockerfiles to move to production. What you need is something to manage all of your containers: a container orchestration system.

The problem

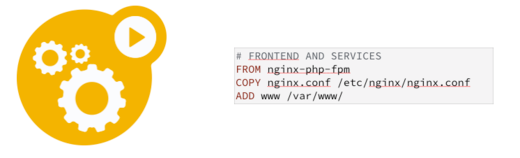

Let's look at a relatively simple application: a database-driven web application using PHP and MySQL. Your first pass at a solution might be to put all of the HTTP responsibilities, like serving the API and the frontend user interface (UI), into one container, and all of the backend storage into a second container.

You now have something that looks like these two images:

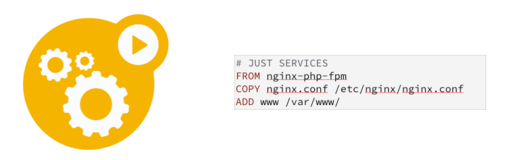

Scaling out this architecture is easy: you add more copies of the frontend container. The next two images show a single frontend with a single backend, and multiple frontends with a single backend:

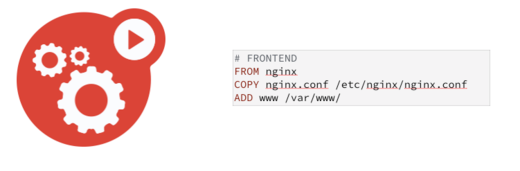

Now someone comes along and tells you that this isn't a good microservice architecture. They're right: you really need to split up responsibilities even further. Serving a PHP-driven API and an HTML/JS/CSS UI from the same container is not a best practice. It is better to split them into two containers:

- PHP and NGINX serving up the API

- NGINX serving up the HTML/JS/CSS

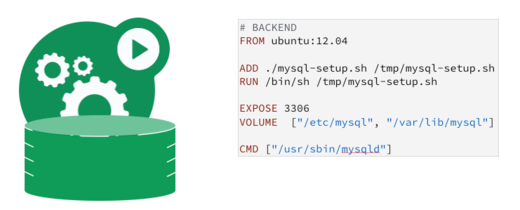

Someone else comes along and points out that running a MySQL persistent data store in an ephemeral container is suboptimal, because when the container goes down you lose your data. To solve this, you can attach a persistent storage volume that lives outside the container. Now the container running MySQL can go down without taking your data with it.

Now you can scale up your container solution and use more containers to handle greater loads.

Now you have a great container solution, capable of handling large amounts of traffic, but it is based on a lie. Well, a lie might be a little unfair, so let's say it is based on an abstraction. The containers are still running on a machine with finite resources, so you want to split that work among multiple machines. And what happens when one of those machines goes down and you need to move its containers somewhere else?

Now you've added a whole lot of complexity to your setup, and you still have the underlying complexity of maintaining individual machines, dealing with uptime, and moving resources around.

The solution: Container orchestration

You would love to have some sort of system to manage all of the bare-metal or virtual machines that you need to run your containers on. You would also love it if that system could manage your containers: launching them on the underlying machines, making sure they are distributed across those machines, and keeping them healthy. What I have described here is called a container orchestration system, and Kubernetes is one such system.

There are two concepts that are very important when discussing containers and Kubernetes.

Ephemeral computing

Servers go down. Stacks overflow. When you serve millions of requests a day, the one-in-a-million problem happens every day. Instead of chasing ever-elusive nines of uptime, design your applications to handle a process going down. Expect anything running in a container to simply go away and be replaced by a fresh copy of that container.

There are a lot of metaphors for this, but the most common is "Pets vs. Cattle." Pets are servers that you treat like your household dog, cat, rabbit, or minipig. You name them, you care for them as individuals, and when they get sick in the middle of the night you nurse them back to health. You don't want that with containers. Instead, you want servers that you can treat like cattle: run them in large numbers, don't bother with individual names, and if one gets sick in the middle of the night, you make hamburgers. (Actually, don't eat a sick cow. That's how you catch incurable diseases. Make a leather coat instead.)

Desired state

Desired state is the counterpoint to what we would call imperative-style instruction, which is just a long way of saying scripting. When we write scripts, things happen because we list specific steps and wait for each one to complete. With a script you can create new:

- images with code updates

- SQL databases

- API servers

- frontend servers

If one of the steps fails, the process stops and your application doesn't launch. If one of those servers goes down after startup, someone or something has to relaunch it.
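To make the contrast concrete, here is a minimal Python sketch of the imperative style. The step names and the `run_script` helper are hypothetical, and each step is a stand-in function rather than a real build or provisioning command. What matters is the control flow: the steps run in order, and the first failure halts everything after it.

```python
# Minimal sketch of imperative-style deployment (hypothetical steps).

def run_script(steps):
    """Run (name, action) steps in order and stop at the first failure.

    Returns (completed_step_names, failed_step_name_or_None).
    """
    completed = []
    for name, action in steps:
        try:
            action()
        except Exception as err:
            # No retry, no recovery: every later step is skipped.
            print(f"step {name!r} failed: {err}; stopping")
            return completed, name
        completed.append(name)
    return completed, None


def start_api_servers():
    # Stand-in for a real step that fails, e.g. because a dependency is missing.
    raise RuntimeError("database not reachable")


steps = [
    ("build images with code updates", lambda: None),
    ("create SQL database", lambda: None),
    ("start API servers", start_api_servers),
    ("start frontend servers", lambda: None),  # never runs
]

done, failed = run_script(steps)
print("completed:", done)
print("failed at:", failed)
```

Nothing in this script notices if a server it started later dies. Restarting it means running the script again or intervening by hand.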

Desired state, or declarative, systems instead say: "Describe the setup you want and the system will make it happen." So instead of writing out steps, you say:

- I want 1 replica of the database.

- I want 2 replicas of API servers.

- I want 3 replicas of frontend servers.
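The three declarations above amount to a small table of desired counts. The Python sketch below shows how a system can act on them. It compares what is running with what is desired, then starts or stops replicas to close the gap. This is a simplified illustration with hypothetical names (`reconcile`, replica names like `api-1`), not how Kubernetes implements its controllers. A real system repeats this comparison continuously, so a crashed replica is replaced on the next pass.

```python
import itertools

# Desired state: how many replicas of each component should exist.
desired = {"database": 1, "api": 2, "frontend": 3}

# Source of unique suffixes for newly started (hypothetical) replicas.
_next_id = itertools.count(1)


def reconcile(desired, running):
    """Return a new component -> replica-names mapping that matches `desired`.

    Missing replicas are started, extras are stopped, and components
    absent from `desired` are dropped entirely. `running` is not modified.
    """
    result = {}
    for component, want in desired.items():
        replicas = list(running.get(component, []))
        while len(replicas) < want:
            # Too few: start a replacement replica.
            replicas.append(f"{component}-{next(_next_id)}")
        # Too many: stop the extras.
        del replicas[want:]
        result[component] = replicas
    return result


# One frontend too many, and no API replicas at all.
running = {
    "database": ["database-a"],
    "api": [],
    "frontend": ["frontend-a", "frontend-b", "frontend-c", "frontend-d"],
}
state = reconcile(desired, running)
print({name: len(reps) for name, reps in state.items()})
# {'database': 1, 'api': 2, 'frontend': 3}

# An API replica crashes; the next pass replaces it.
state["api"].pop()
state = reconcile(desired, state)
print(len(state["api"]))  # 2
```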

The declarative system ensures that you always have that many replicas running. If one goes down, it starts a replacement. If too many are running, it kills the extras. This does mean you have to change your app a bit. The system doesn't guarantee the order in which things start, so if the API servers come up before the SQL database, you might run into SQL connection errors. You want to add progressive retries, where each failed attempt waits a little longer before trying again. But these changes are straightforward, and in many cases already best practices.
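Here is one way progressive retries might look. This Python sketch uses exponential backoff, in which the delay doubles after each failure up to a cap. The `connect` argument is a hypothetical stand-in for whatever connection function your database driver provides. The sketch assumes connection failures surface as Python's built-in `ConnectionError`. A real MySQL driver raises its own exception types, and you would catch those instead. The `sleep` parameter lets a test record the delays without actually waiting.

```python
import time


def connect_with_retries(connect, attempts=5, base_delay=0.5, max_delay=8.0,
                         sleep=time.sleep):
    """Call `connect()` until it succeeds, backing off exponentially.

    Waits base_delay, then 2x, 4x, ... (capped at max_delay) between
    attempts, and re-raises the last ConnectionError if all attempts fail.
    """
    delay = base_delay
    for attempt in range(1, attempts + 1):
        try:
            return connect()
        except ConnectionError:
            if attempt == attempts:
                raise  # out of attempts: let the caller handle it
            sleep(delay)
            delay = min(delay * 2, max_delay)


# Hypothetical stand-in for a database driver's connect call:
# fails twice, as if MySQL were still starting, then succeeds.
calls = {"count": 0}


def flaky_connect():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("MySQL not ready yet")
    return "connection"


waits = []
conn = connect_with_retries(flaky_connect, sleep=waits.append)
print(conn, waits)  # connection [0.5, 1.0]
```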

Kubernetes for orchestration

Kubernetes is an open source container orchestration system that runs all of your application containers using a desired state philosophy. Google created Kubernetes to capture the experience our engineers acquired over the past 10 years building our own container orchestration system, which we called Borg. Kubernetes is not a port or a conversion of Borg. Instead, it is a greenfield attempt to start fresh, to do things right the first time, and to share our experience. It has benefited from collaboration with Red Hat, CoreOS, IBM, Mesosphere, and Microsoft.

Kubernetes has a lot of components to make it work. Let's explore some of the more important ones.

Containers

Containers are the subatomic components of Kubernetes: you never run a container on its own, because containers always run inside a control structure known as a pod. But you can run the same Docker images, built from the same Dockerfiles, that you run in Docker. I often have Makefiles that run a container in Docker for development, and then launch it on Kubernetes for production.

Pods

Pods are the atomic unit of Kubernetes. The other components of Kubernetes either spin up one or more pods or connect one or more pods to the network. A pod is composed of one or more containers. Containers running in the same pod share storage volumes, a network namespace (so they can reach each other on localhost), a security context, and a few other properties.
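Kubernetes manifests are usually written in YAML, but the API also accepts JSON, so you can build a manifest as plain data. The Python sketch below assembles a Pod manifest for the "PHP and NGINX serving up the API" pair of containers from earlier. The image tags, the `app: api` label, and the volume name and path are assumptions for illustration. The sketch omits the NGINX configuration that forwards PHP requests to PHP-FPM over localhost (by default on port 9000 in the official `php` FPM images). Both containers mount the same `emptyDir` volume, which is how containers in a pod share disk.

```python
import json


def api_pod_manifest(name="api"):
    """Build a Pod manifest with two containers that serve the API together.

    NGINX and PHP-FPM run side by side in one pod, so they share the pod's
    network namespace (localhost) and any volume mounted into both.
    """
    shared_mount = {"name": "shared-files", "mountPath": "/shared"}
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": "api"}},
        "spec": {
            # emptyDir: scratch space that lives as long as the pod does.
            "volumes": [{"name": "shared-files", "emptyDir": {}}],
            "containers": [
                {
                    "name": "nginx",
                    "image": "nginx:1.25",
                    "ports": [{"containerPort": 80}],
                    "volumeMounts": [dict(shared_mount)],
                },
                {
                    "name": "php-fpm",
                    "image": "php:8.2-fpm",
                    "volumeMounts": [dict(shared_mount)],
                },
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(api_pod_manifest(), indent=2))
```

You could save the output to a file, such as the hypothetical `api-pod.json`, and create the pod with `kubectl apply -f api-pod.json`.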

Deployments

Deployments are control structures that run one or more pods. Pods by themselves have no concept of desired state. A deployment manages the desired state of a group of pods by creating replicas: if you ask for three copies of a pod, the deployment keeps three pods running. Deployments also manage updates to pods, such as rolling out a new image version.
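A Deployment manifest wraps a pod template with a replica count and a label selector. The sketch below builds one for the frontend in the same JSON-as-Python-data style. The name `frontend`, the `app: frontend` label, and the `nginx:1.25` default image are assumptions for illustration. The selector has to match the template's labels, because that is how the deployment recognizes the pods it owns.

```python
import json


def frontend_deployment(image="nginx:1.25", replicas=3):
    """Build a Deployment manifest that keeps `replicas` frontend pods running."""
    labels = {"app": "frontend"}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "frontend"},
        "spec": {
            # Desired state: this many pods from the template below.
            "replicas": replicas,
            # The selector must match the pod template's labels.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [
                        {
                            "name": "frontend",
                            "image": image,
                            "ports": [{"containerPort": 80}],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(frontend_deployment(), indent=2))
```

If you change `image` and apply the manifest again, the deployment replaces its pods with ones running the new image.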

Services

Services give ephemeral pods a stable endpoint, so that internal or external processes can treat them as long-running hosts, like an API endpoint or a MySQL host. Suppose you have three pods running a web server container, and you need a way to route requests from the public Internet to them. You can do this by setting up a service that uses a load balancer to route requests from a public IP address to one of the pods. Give the pods a label, say "web-server," and then in the service definition say: "Serve port 80 using any pod labeled web-server." Kubernetes will then use a public load balancer to divide the traffic among the selected pods. You can also do this privately for hosts that you only want to expose to other parts of your Kubernetes application, like a database server.
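The sketch below expresses the web server example as a Service manifest, again as Python data that you can serialize to JSON. Label values cannot contain spaces, so it uses the key/value pair `app: web-server`. The service name `web` and the `app` key are assumptions. Setting `type: LoadBalancer` asks the cloud provider for a public load balancer, while `ClusterIP` keeps the service private to the cluster. The `selected_pods` helper is a hypothetical, simplified model of how an equality-based selector picks pods. It is not Kubernetes's own code.

```python
import json


def web_service(label_value="web-server", port=80, public=True):
    """Build a Service manifest routing `port` to pods labeled app=<label_value>."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": "web"},
        "spec": {
            # LoadBalancer requests a public load balancer from the cloud
            # provider; ClusterIP keeps the service internal to the cluster.
            "type": "LoadBalancer" if public else "ClusterIP",
            "selector": {"app": label_value},
            "ports": [{"port": port, "targetPort": port}],
        },
    }


def selected_pods(selector, pods):
    """Simplified equality-based selection: names of pods whose labels
    include every key/value pair in `selector`."""
    return [name for name, labels in pods.items()
            if all(labels.get(key) == value for key, value in selector.items())]


pods = {
    "web-1": {"app": "web-server"},
    "web-2": {"app": "web-server"},
    "web-3": {"app": "web-server"},
    "db-1": {"app": "mysql"},
}
service = web_service()
print(selected_pods(service["spec"]["selector"], pods))  # ['web-1', 'web-2', 'web-3']
print(json.dumps(service, indent=2))
```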

Wrapping up

In summary, to serve a simple web frontend on Kubernetes you would:

- Configure a Docker image.

- Configure a deployment that references that image.

- Run the deployment to spin up a replica set of pods based on that image.

- Configure a service that references the pods.

- Run the service using a public load balancer.

Kubernetes is by no means the only solution to the problems described here. Docker Swarm, Amazon EC2 Container Service, and Mesosphere are alternatives. Kubernetes has an active open source contributor community, a robust architecture, and the ability to run both on many commercial clouds and on bare metal. I invite you to check out the project and see if it makes sense for you.

I'll be giving a talk on this at DrupalCon Dublin 2016.
