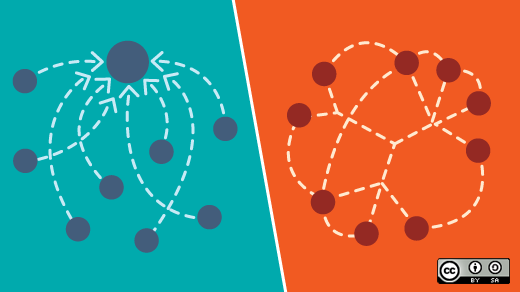

When we talk about the innovation that communities bring to open source software, we often focus on how open source enables contributions and collaboration within communities. More contributors, collaborating with less friction.

However, as new computing architectures and approaches rapidly evolve for cloud computing, big data, and the Internet of Things (IoT), it's also becoming evident that the open source development model is extremely powerful because it allows innovations from multiple sources to be recombined and remixed. Consider the following examples.

Containers are fundamentally enabled by Linux. As I discussed in more detail recently, all the security hardening, performance tuning, reliability engineering, and certifications that apply to a bare metal or virtualized world still apply in the containerized one. And, in fact, the operating system arguably shoulders an even greater responsibility for tasks such as resource or security isolation than when individual operating system instances provided a degree of inherent isolation.

What's made containers so interesting in their current incarnation (the basic concept dates back over a decade) is that they bring together work from communities such as Docker, which focus on packaging applications for containers and generally making containers easier to use, with complementary innovations in the Linux kernel. Linux security features (such as those discussed in this post by Red Hat's Dan Walsh) and resource controls such as control groups (cgroups) provide the infrastructure foundation needed to safely take advantage of the flexibility of container application packaging and deployment. Project Atomic then brings together the tools and patterns of container-based application and service deployment.

We see similar cross-pollination in the management and orchestration of containers across multiple physical hosts; Docker is mostly concerned with management within a single operating system instance or host. One of the projects you're starting to hear a lot about in the orchestration space is Kubernetes, which came out of Google's internal container work. It aims to provide features such as high availability and replication, service discovery, and service aggregation. However, full orchestration, resource placement, and policy-based management of a containerized environment will inevitably draw from many different communities.

For example, a number of projects are working on ways to potentially complement Kubernetes by providing frameworks through which applications can interact with a scheduler. One such project is Apache Mesos, which provides a higher level of abstraction with APIs for resource management and scheduling across cloud environments. A related project is Apache Aurora, which Twitter employs as a service scheduler to schedule jobs onto Mesos. At a still higher level, cloud management platforms such as ManageIQ extend management across hybrid cloud environments and provide policy controls that govern workload placement based on business rules as opposed to just technical considerations.
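To make the distinction between technical considerations and business rules concrete, here is a minimal sketch of policy-based placement. It is purely illustrative: the `Host` and `Workload` types, the `place` function, and the region rule are all hypothetical names invented for this example, and none of them reflects the actual API of ManageIQ, Mesos, or Kubernetes.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Host:
    # Hypothetical description of a machine that can run workloads.
    name: str
    free_cpus: int
    region: str


@dataclass(frozen=True)
class Workload:
    # Hypothetical workload; allowed_regions stands in for a business
    # rule such as a data residency requirement.
    name: str
    cpus: int
    allowed_regions: frozenset


def place(workload: Workload, hosts: list) -> Optional[Host]:
    """Pick a host that satisfies both capacity and the region policy."""
    # Technical consideration: the host must have enough free CPUs.
    fits = [h for h in hosts if h.free_cpus >= workload.cpus]
    # Business rule: the host must sit in a region the workload may use.
    permitted = [h for h in fits if h.region in workload.allowed_regions]
    # Prefer the host with the most headroom; None if nothing qualifies.
    return max(permitted, key=lambda h: h.free_cpus, default=None)


hosts = [
    Host("on-prem-1", free_cpus=4, region="eu"),
    Host("cloud-1", free_cpus=16, region="us"),
]
job = Workload("billing", cpus=2, allowed_regions=frozenset({"eu"}))
chosen = place(job, hosts)
print(chosen.name if chosen else "no host satisfies the policy")
```

On capacity alone, the scheduler would favor `cloud-1`, which has the most free CPUs. The region rule overrides that and sends the job to `on-prem-1`, which is the kind of decision a policy layer adds on top of a purely technical scheduler.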

We see analogous mixing, matching, and remixing in storage and data. "Big data" platforms increasingly combine a wide range of technologies from Hadoop MapReduce to Apache Spark to distributed storage projects such as Gluster and Ceph. Ceph is also the typical storage backend for OpenStack—having first been integrated in OpenStack's Folsom release to provide unified object and block storage.

In general, OpenStack is a great example of how different, perhaps only somewhat-related open source communities can integrate and combine in powerful ways. I previously mentioned the software-defined storage aspect of OpenStack, but OpenStack also embeds software-defined compute and software-defined networking (SDN). Networking's an interesting case because it brings together a number of different communities, including OpenDaylight (a collaborative SDN project under the Linux Foundation), Open vSwitch (which can be used as a node for OpenDaylight), and network function virtualization (NFV) projects that can then sit on top of OpenDaylight to create software-based firewalls, for example.

It's evident that, interesting as individual projects may be when taken in isolation, what's really accelerating today's pace of change in software is the combination of these many parts building on and amplifying each other. It's a dynamic that just isn't possible with proprietary software.
