The United Nations has proactively researched and promoted open government data across the globe for close to five years now. The Open Data Institute maintains that open data can help "unlock supply, generate demand, and create and disseminate knowledge to address local and global issues." McKinsey & Company report that "seven sectors alone could generate more than $3 trillion a year in additional value as a result of open data."

There is no doubt that open data is an important public policy area—one that is here to stay. Yet, for all the grand promises, scratch beneath the surface and one finds a remarkable paucity of hard empirical facts about what is and isn't happening on the ground—in the real world of cities where most of us increasingly live and work.

Recent results from Citadel-on-the-Move, a €4 million project funded by the European Commission, are beginning to fill this gap, particularly by shedding light on existing open data practice (as opposed to best-practice theory). The Citadel was grounded in the conviction that the power of open data remains untapped, and it aimed to unleash that power by giving civil servants and citizens simple, easy-to-use open source tools to publish and use open data. Over the course of the project, The Citadel engaged more than 140 cities across six continents, uncovering a treasure trove of new findings about the local open data landscape in the process.

Original photo by 21c Consultancy. CC BY-SA 4.0

The first key finding concerns the level of open data maturity among the cities:

- 17% had no previous contact with open data (no data was publicly available)

- 24% had little experience with open data (some data, but no city portal or systematic release)

- 47% had some experience with open data (a city portal or systematic release, but no clear policy on open data publication and updates)

- 12% had advanced experience with open data (a portal or systematic release and a policy of open data publication & updates)

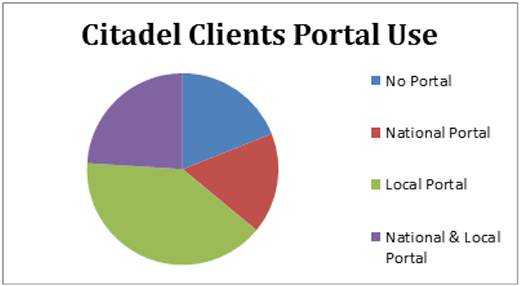

The second key finding concerns the use of open data portals or websites where data was made available to the public. Of all the cities surveyed, 10% had no publicly accessible open data portal, 17% used a national open data portal, 44% used a local open data portal, and 29% used both local and national portals.

Original photo by 21c Consultancy. CC BY-SA 4.0

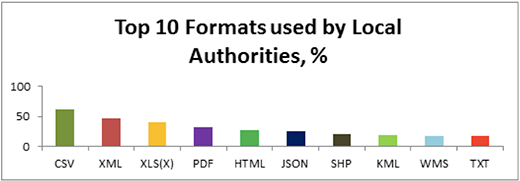

The final key finding concerns the formats, or file structures, used to publish open data. The survey identified 77 distinct publishing formats, ranging from commonly accepted ones to highly specialized formats used to express specific types of information, such as geographic data. The most common format was CSV (Comma Separated Values), which 62% of cities used to publish at least one of their open data files, followed by XML (eXtensible Markup Language) and XLS (Microsoft Excel) at 47% and 40% respectively.

Most cities published in two or three different formats, reflecting the range of information they have to make available. The following figure shows the percentage of cities using each of the 10 most popular formats:

Original photo by 21c Consultancy. CC BY-SA 4.0

From discussions with more than 100 cities, The Citadel team quickly understood that most public sector data owners do not have a strong grasp of the relative benefits of different data formats. As a result, all but the most advanced take the path of least resistance and publish data in whatever format they already hold it in. Whilst this practice forgoes the greater possibilities of more capable formats, it has several practical implications for international data standards bodies.

As an excellent analysis of data standards by Tim Davies argues, in the early years of open data many standards advocates, including The Citadel in its initial white paper, championed linked open data (LOD), an approach that represents data as a series of interconnected links, because its way of representing data, primarily using RDF, can support very advanced models and tools. Over time, however, many came to reject the LOD model because, as our own work on The Citadel discovered, it is highly complex to create and work with and therefore excludes less technically advanced data owners. For a short while, it then looked as though XML (a W3C standard) was a better choice for publishing open data: it supports a strict schema for organizing datasets, it can be exported easily from standard city web portals, and applications consuming the data can retrieve it easily. However, over the past two years there has likewise been a move away from XML, because building its rigid schemas into apps proved difficult.

More recently, the open data community has begun to embrace the notion that simpler tabular formats offer the best prospect for reuse by the widest possible community, including those for whom more technically complex formats like XML are a barrier to entry. In line with this trend toward flat, schema-free data, CSV has gained popularity as the best general-purpose format for releasing open data. W3C's Technical Architecture Group is currently working on a draft of guidelines on CSV syntax and best practices, and has even gone as far as to declare 2014 "The Year of CSV."

The Citadel's work with local authorities across Europe, and its subsequent findings on data formats, support the W3C position. Whilst certain sections of the technical community, notably those committed to furthering Tim Berners-Lee's "Web of Data" vision, may still advocate the advanced capabilities of LOD (represented through RDF), Citadel's findings show that this ideal ignores the reality on the ground in four respects:

- City data owners overwhelmingly prefer the simplicity of CSV as a publication format.

- Open data advocates promote CSV for data dumps, as this format provides greater clarity than RDF.

- A growing number of developers prefer CSV tables, as this format represents a blank canvas on which they can work more effectively.

- Ordinary citizens wishing to use data can understand CSV files without the advanced technical skills required for RDF or XML.
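
To make the contrast between flat and schema-bound formats concrete, here is a minimal sketch using only Python's standard library. It assumes a hypothetical dataset of city bicycle-parking sites; the field names, element names, and values are invented for illustration and do not come from the Citadel survey. The CSV version is read with `csv.DictReader`, which takes its field names straight from the header row, whereas the XML version needs code that already knows the element and attribute layout before it can extract the same records.

```python
import csv
import io
import xml.etree.ElementTree as ET

# Hypothetical example data: two invented bicycle-parking sites.
CSV_TEXT = """site_id,street,capacity
1,Main Street,12
2,Station Road,30
"""

# The same hypothetical records expressed as XML.
XML_TEXT = """<parking_sites>
  <site id="1"><street>Main Street</street><capacity>12</capacity></site>
  <site id="2"><street>Station Road</street><capacity>30</capacity></site>
</parking_sites>"""


def rows_from_csv(text):
    """Read CSV rows; the header row is the only 'schema' the file carries."""
    return [dict(row) for row in csv.DictReader(io.StringIO(text))]


def rows_from_xml(text):
    """Read XML rows; the consumer must know the element layout in advance."""
    root = ET.fromstring(text)
    return [
        {
            "site_id": site.get("id"),             # attribute on <site>
            "street": site.findtext("street"),     # child element text
            "capacity": site.findtext("capacity"),
        }
        for site in root.findall("site")
    ]


if __name__ == "__main__":
    csv_rows = rows_from_csv(CSV_TEXT)
    # Both formats carry the same records; only the effort to read them differs.
    assert csv_rows == rows_from_xml(XML_TEXT)
    print(sum(int(row["capacity"]) for row in csv_rows))  # 42
```

The CSV file can also be opened directly in any spreadsheet program, which is the property the list above highlights for non-technical users; the XML reader, by contrast, has to change whenever the element structure does.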

The trend toward flat datasets like CSV that The Citadel project identified suggests that the less provenance or "baggage" a dataset carries, the more useful it is for all users, regardless of technical ability. Data standards advocates would do well to take their lead from this bottom-up finding rather than trying to impose a technical ideal from above.
